Introduction to Agentic AI: The Dawn of Autonomous Digital Co-Workers and a Paradigm Shift in 2025

The year 2025 marks a pivotal moment in the evolution of artificial intelligence, a period in which the buzzword is not just AI but, specifically, Agentic AI. This is not merely an incremental update to existing AI models; it represents a fundamental paradigm shift in how we conceive, build, and interact with intelligent systems. At its core, Agentic AI refers to AI systems, often powered by advanced Large Language Models (LLMs) and other machine learning techniques, that can perceive their environment, make autonomous decisions, formulate plans, and take actions to achieve specific goals with minimal human intervention. For instance, Stanford researchers have developed ‘generative agents’ that use LLMs to simulate believable humanlike behavior, showcasing how autonomous systems can interact dynamically in complex environments. Think of them less as passive tools waiting for commands and more as proactive, goal-oriented digital co-workers, researchers, assistants, or even specialized task forces capable of navigating complex digital (and, increasingly, physical) landscapes.

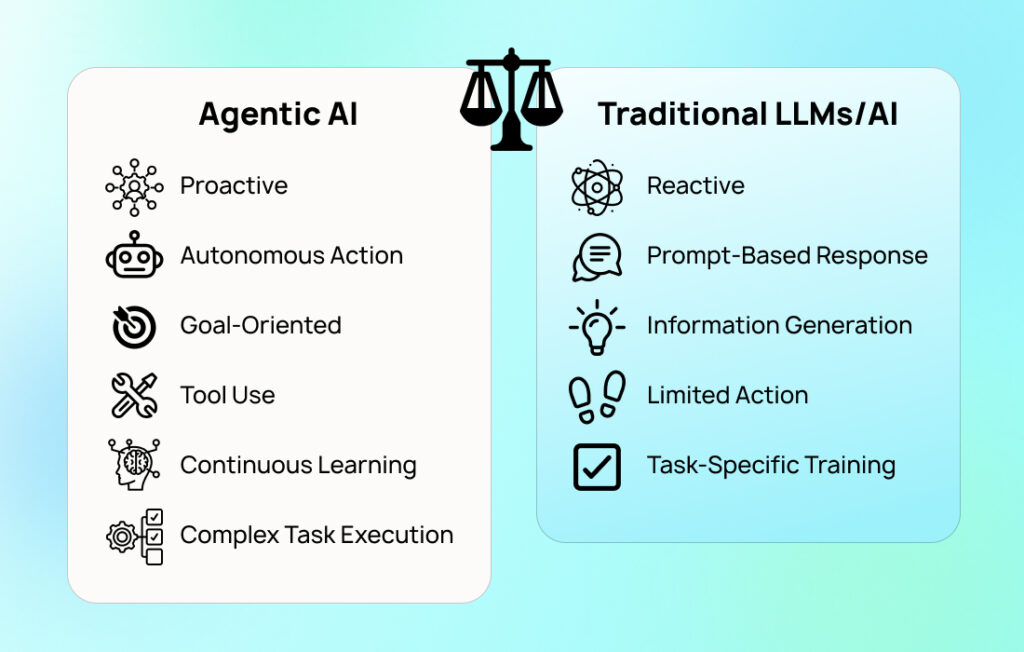

For decades, AI development has progressed through various stages. We’ve seen rule-based systems that meticulously follow predefined instructions, statistical models that excel at pattern recognition and prediction, and more recently, generative AI like LLMs (think ChatGPT, Claude, Gemini) that have astounded us with their ability to understand and produce human-like text, images, and code. These generative models are incredibly powerful as information processors and content creators. However, their primary mode of operation is reactive – they respond to prompts and queries. You ask a question, they provide an answer. You give an instruction, they generate content.

Agentic AI takes this a crucial step further. It endows these powerful AI brains, often LLMs, with agency – the capacity to act independently and purposefully. An agentic system doesn’t just answer your question about the best way to launch a marketing campaign; it can devise the campaign strategy, draft the email copy, schedule the social media posts, monitor the engagement, and adjust the plan based on real-time results. This transition from a responsive information provider to an autonomous action-taker is the hallmark of the agentic revolution. It’s the difference between having a brilliant research assistant who can find any information you ask for, and having a project lead who can take your high-level objective and manage the entire project to completion.

The implications of this shift are profound. In 2025, businesses are not just asking, “What can AI tell me?” but “What can AI do for me, autonomously?” The rise of agentic AI is driven by several converging factors: the increasing sophistication of LLMs providing robust reasoning and natural language understanding capabilities; the development of frameworks (like LangChain, Auto-GPT, CrewAI) that provide the scaffolding for agentic behavior (planning, tool use, memory); the proliferation of APIs allowing agents to interact with a vast array of digital services; and the growing demand for more efficient, scalable, and intelligent automation solutions across all industries.

Consider the sheer volume of digital tasks and information flows in modern life and business. Managing these effectively often requires sifting through data, making decisions, and executing sequences of actions across multiple platforms. Agentic AI offers the promise of automating not just the individual steps, but the entire intelligent workflow. This is why it’s being hailed as a significant leap towards more practical and impactful AI applications. It’s about moving from AI that can write a plan to AI that can execute the plan, learn from the execution, and adapt. This deep dive will explore what constitutes these autonomous AI agents, how they function, their diverse applications in 2025, the tools enabling their creation, their benefits, their inherent limitations, and the exciting, albeit challenging, future they herald.

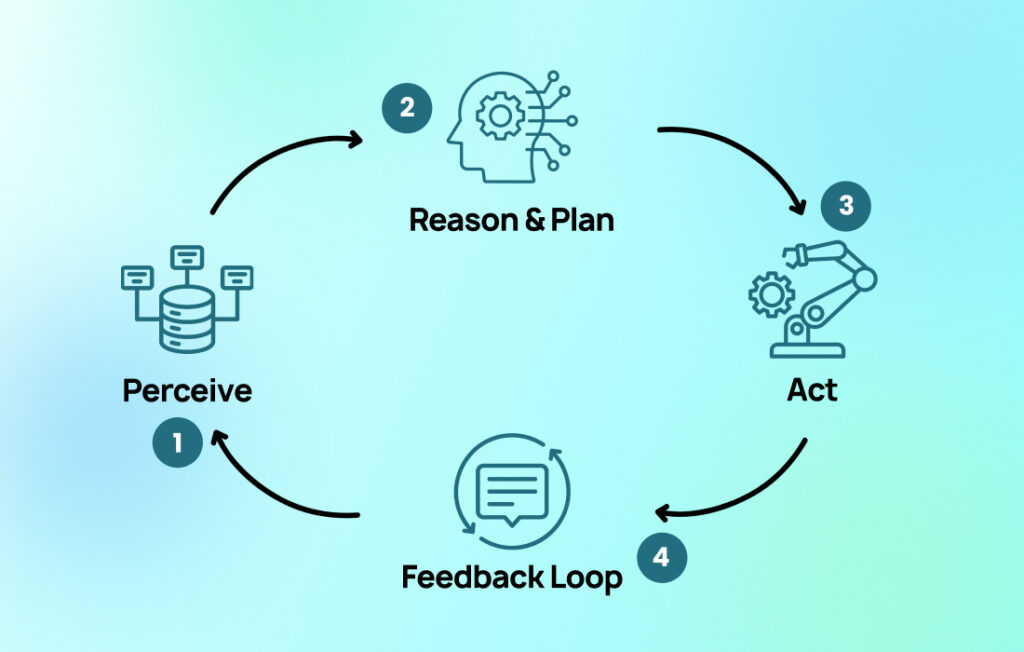

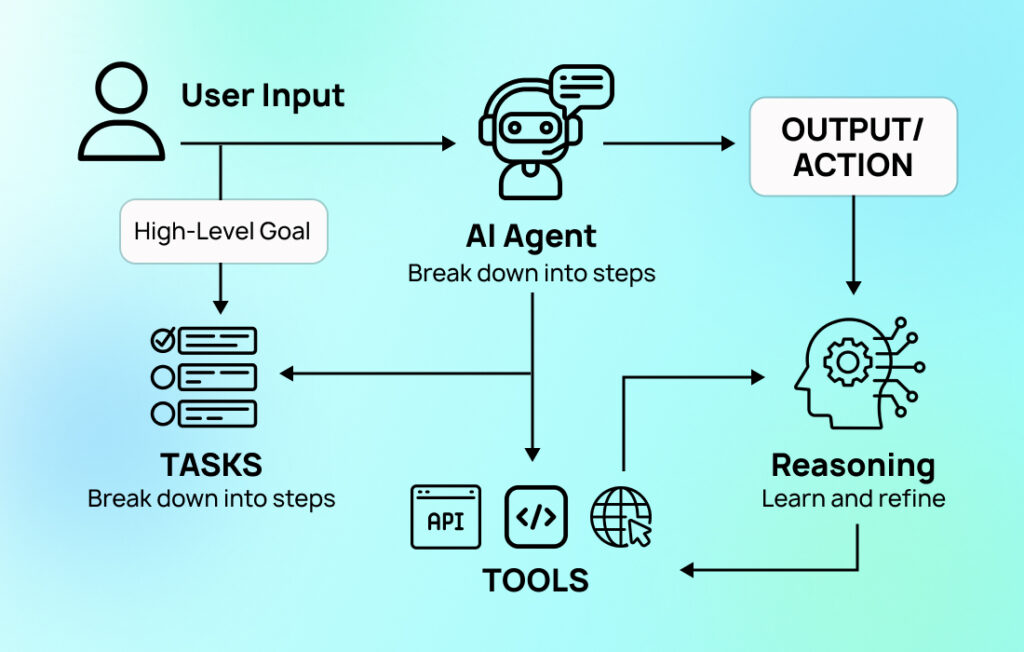

How Autonomous AI Agents Work: The Core Loop of Perception, Reasoning, Planning, and Action

Understanding agentic AI requires a look under the hood at the fundamental operational loop that defines their autonomous behavior. While the specific implementations can vary greatly depending on the complexity of the agent and its purpose, most autonomous AI agents in 2025 operate on a cyclical process involving perception, reasoning (often powered by an LLM), planning, and action. This loop allows them to interact with their environment, make sense of information, decide on a course of action, and then execute it, learning and adapting as they go.

1. Perception: Sensing the Environment

An agent’s first step is to perceive its environment. This “environment” can be purely digital (e.g., websites, databases, APIs, text documents, code repositories) or, in the case of embodied agents like robots, the physical world (through cameras, microphones, lidar, tactile sensors, etc.).

- Data Ingestion: For digital agents, perception involves ingesting data in various forms – reading text from a webpage, querying a database, receiving data from an API, analyzing the content of a file, or processing user input in natural language.

- Multimodal Understanding: Increasingly, agents are equipped with multimodal capabilities, allowing them to perceive and interpret not just text but also images, audio, and video. For example, an agent tasked with monitoring social media might analyze the text of posts, the images shared, and even the sentiment expressed in video clips.

- State Representation: The agent needs to convert these raw sensory inputs into an internal representation of the current state of the environment relevant to its goals. This might involve parsing data, extracting key entities and relationships, and updating its internal knowledge base.
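As a toy illustration of state representation, the sketch below turns a raw text observation into structured facts. The `AgentState` class, the `update_state` function, and the regex for lines like "Option A: $320" are all hypothetical. Real agents usually delegate this kind of extraction to an LLM or a dedicated parser rather than a hand-written pattern.

```python
import re
from dataclasses import dataclass, field

# Hypothetical pattern for lines like "Option A: $320"; real agents often use an LLM
# or a proper parser for entity extraction instead of a hand-written regex.
PRICE_PATTERN = re.compile(r"(?P<option>Option [A-Z])\s*:\s*\$(?P<price>\d+(?:\.\d+)?)")


@dataclass
class AgentState:
    """Hypothetical internal snapshot of the parts of the environment relevant to the goal."""
    goal: str
    facts: dict[str, float] = field(default_factory=dict)
    last_observation: str = ""


def update_state(state: AgentState, raw_text: str) -> AgentState:
    """Extract entities (here: option prices) from raw input and merge them into the state."""
    for match in PRICE_PATTERN.finditer(raw_text):
        state.facts[match["option"]] = float(match["price"])
    state.last_observation = raw_text
    return state


if __name__ == "__main__":
    state = update_state(AgentState(goal="Book a flight"),
                         "Option A: $320 (1 stop). Option B: $410 direct.")
    print(state.facts)  # {'Option A': 320.0, 'Option B': 410.0}
```

The raw text is kept in `last_observation` so that later reasoning steps can still consult details the extractor did not capture.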

2. Reasoning & Decision Making: The LLM as the Brain

Once the agent has perceived its environment and updated its understanding of the current state, it needs to decide what to do next. This is where the “brain” of the agent, often a Large Language Model (LLM), comes into play.

- Goal Orientation: The agent operates with one or more predefined goals (e.g., “Find the best Italian restaurants in San Francisco,” “Summarize the latest research on quantum computing,” “Book a flight from New York to London for next Tuesday”).

- LLM-Powered Deliberation: The current state information, along with the agent’s goals and its available tools (see below), is typically fed into an LLM. The LLM is prompted to reason about the situation, evaluate potential next steps, and decide on the most appropriate action to move closer to its goal. This might involve breaking down a complex goal into smaller, manageable sub-goals. OpenAI’s Deep Research, for example, shows how LLM-powered agents can autonomously research complex topics over multiple steps.

- Contextual Understanding and Memory: Effective reasoning requires the agent to maintain context. This includes short-term memory (e.g., the history of recent actions and observations) and potentially long-term memory (e.g., knowledge learned from past tasks, user preferences). Vector databases are often used to store and retrieve relevant information for the LLM to consider during its reasoning process.

- Example Prompt for LLM (Simplified): “My goal is to book a flight. I have already searched for flights and found options A, B, and C. Option A is cheapest but has a long layover. Option B is more expensive but direct. Option C is mid-priced with a short layover. My user prefers a balance of cost and convenience. What should I do next? Available tools: select_flight(option_details), ask_user_for_clarification(question).”
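The memory and prompting ideas above can be sketched together as follows, under stated assumptions. `AgentMemory` and `build_prompt` are hypothetical names. Long-term recall here ranks facts by word overlap, which is only a stand-in for the embedding similarity search a vector database would perform. The resulting prompt mirrors the simplified flight-booking example.

```python
from collections import deque


class AgentMemory:
    """Hypothetical memory: a short-term window of recent steps plus a long-term fact store."""

    def __init__(self, window: int = 5):
        self.short_term = deque(maxlen=window)  # oldest steps fall off automatically
        self.long_term: list[str] = []

    def remember_step(self, step: str) -> None:
        self.short_term.append(step)

    def store_fact(self, fact: str) -> None:
        self.long_term.append(fact)

    def recall(self, query: str, k: int = 2) -> list[str]:
        """Return up to k facts sharing the most words with the query.

        Word overlap is a stand-in for the embedding similarity a vector database would use.
        """
        query_words = set(query.lower().split())
        scored = [(len(query_words & set(fact.lower().split())), fact) for fact in self.long_term]
        ranked = sorted((item for item in scored if item[0] > 0),
                        key=lambda item: item[0], reverse=True)
        return [fact for _, fact in ranked[:k]]


def build_prompt(goal: str, memory: AgentMemory, tools: dict[str, str]) -> str:
    """Combine goal, recent steps, recalled facts, and tool descriptions into one prompt."""
    recent = "\n".join(memory.short_term) or "(none)"
    relevant = "\n".join(memory.recall(goal)) or "(none)"
    tool_lines = "\n".join(f"- {name}: {desc}" for name, desc in tools.items())
    return (f"My goal is: {goal}\n"
            f"Recent steps:\n{recent}\n"
            f"Relevant long-term memory:\n{relevant}\n"
            f"Available tools:\n{tool_lines}\n"
            "What should I do next?")


if __name__ == "__main__":
    memory = AgentMemory(window=3)
    memory.remember_step("search_flights('NYC->LON') -> options A, B, C")
    memory.store_fact("User prefers a balance of cost and convenience")
    print(build_prompt("Book a flight balancing cost and convenience", memory, {
        "select_flight": "select_flight(option_details)",
        "ask_user_for_clarification": "ask_user_for_clarification(question)",
    }))
```

Keeping short-term memory in a bounded `deque` caps prompt growth. Long-term facts are injected only when they appear relevant to the goal.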

3. Planning: Charting the Course

For more complex tasks, the reasoning phase might lead to the creation of a multi-step plan. The agent doesn’t just decide on the immediate next action but outlines a sequence of actions to achieve its goal.

- Task Decomposition: The LLM might break down a high-level goal into a series of smaller, ordered tasks. For example, the goal “Plan a vacation to Italy” might be decomposed into: 1. Research destinations. 2. Determine budget. 3. Find flights. 4. Book accommodation. 5. Plan itinerary.

- Tool Selection: For each step in the plan, the agent identifies the appropriate tool or capability it needs to use.

- Dynamic Replanning: The environment can be dynamic and unpredictable. An action might not yield the expected result, or new information might become available. Therefore, agents must be capable of dynamic replanning – reassessing the situation and modifying their plan as needed. If a chosen flight option becomes unavailable, the agent needs to go back, search again, and update its plan.
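Task decomposition, tool selection, and dynamic replanning can be modeled with a small plan structure. The sketch below is hypothetical: `Step`, `Plan`, `replan_from`, and the tool names are illustrative, not taken from any framework. In a real agent the LLM would generate both the initial steps and the recovery steps.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Step:
    description: str
    tool: str          # hypothetical tool name the agent would call for this step
    done: bool = False


@dataclass
class Plan:
    goal: str
    steps: list[Step] = field(default_factory=list)

    def next_step(self) -> Optional[Step]:
        """First unfinished step, or None when the plan is complete."""
        return next((s for s in self.steps if not s.done), None)

    def replan_from(self, failed: Step, recovery: list[Step]) -> None:
        """Replace the failed step (matched by identity) with new recovery steps."""
        index = next(i for i, s in enumerate(self.steps) if s is failed)
        self.steps[index:index + 1] = recovery


def vacation_plan() -> Plan:
    # Decomposition of "Plan a vacation to Italy" into the five ordered sub-tasks from the text.
    return Plan("Plan a vacation to Italy", [
        Step("Research destinations", "web_search"),
        Step("Determine budget", "calculator"),
        Step("Find flights", "flight_search"),
        Step("Book accommodation", "hotel_booking"),
        Step("Plan itinerary", "llm"),
    ])


if __name__ == "__main__":
    plan = vacation_plan()
    plan.next_step().done = True   # destinations researched
    plan.next_step().done = True   # budget set
    failed = plan.next_step()      # the chosen flight turns out to be unavailable
    plan.replan_from(failed, [Step("Search flights again with flexible dates", "flight_search")])
    print([s.description for s in plan.steps if not s.done])
```

Completed steps are left untouched during replanning, so the agent keeps the work it has already done and only revises the remainder of the plan.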

4. Action: Interacting with the Environment

Once a plan (or at least the next action) is decided, the agent executes it. This involves using its available “tools.”

- Tool Use: Tools are functions, APIs, or other capabilities that allow the agent to interact with its environment or perform specific operations. Examples include:

- Search Tools: Google Search, Wikipedia API, database query interfaces.

- Communication Tools: Email APIs, messaging platform integrations.

- File System Tools: Read/write files, create directories.

- Code Execution Tools: Python REPL, shell access (used with extreme caution and strong sandboxing).

- Web Interaction Tools: Browser automation libraries (e.g., Playwright, Selenium) to navigate websites, fill forms, and click buttons.

- Calculation Tools: A simple calculator or a Python interpreter for math.

- Custom Tools: Developers can create custom tools specific to the agent’s task, such as interacting with a proprietary company API.

- Executing the Action: The agent calls the chosen tool with the necessary parameters (as determined by the LLM’s reasoning). For example, it might execute a Google search with a specific query or call an API to fetch data.
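A minimal sketch of tool registration and execution follows, assuming tools take and return strings (a common simplification when tool I/O passes through an LLM). The `tool` decorator, the `TOOLS` registry, and `execute` are hypothetical names. The calculator deliberately walks the parsed syntax tree instead of calling `eval`, in keeping with the caution about code execution noted above.

```python
import ast
import operator
from typing import Callable

TOOLS: dict[str, Callable[[str], str]] = {}  # hypothetical registry: name -> function


def tool(name: str):
    """Decorator that registers a function under a name the LLM can refer to."""
    def register(func: Callable[[str], str]) -> Callable[[str], str]:
        TOOLS[name] = func
        return func
    return register


_OPS = {ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul,
        ast.Div: operator.truediv, ast.Pow: operator.pow, ast.USub: operator.neg}


def _eval(node: ast.AST):
    """Evaluate numeric literals and + - * / ** only; reject anything else."""
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
    if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval(node.operand))
    raise ValueError("unsupported expression")


@tool("calculator")
def calculator(expression: str) -> str:
    return str(_eval(ast.parse(expression, mode="eval").body))


@tool("word_count")
def word_count(text: str) -> str:
    return str(len(text.split()))


def execute(action: str, action_input: str) -> str:
    """Run the tool the LLM selected; errors become observations instead of crashes."""
    if action not in TOOLS:
        return f"Error: unknown tool '{action}'. Available: {', '.join(sorted(TOOLS))}"
    try:
        return TOOLS[action](action_input)
    except Exception as exc:
        return f"Error: {exc}"


if __name__ == "__main__":
    print(execute("calculator", "2 * (3 + 4)"))  # 14
```

Returning errors as text, rather than raising them, lets the LLM read the failure on its next step and try a different tool or input.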

5. Observation and Learning: Closing the Loop

After taking an action, the agent observes the outcome. This observation is then fed back into the perception phase, and the cycle repeats.

- Outcome Analysis: The agent processes the result of its action. Did the action succeed? Did it produce the expected information? Did the state of the environment change as anticipated?

- Learning and Adaptation: Over time, more sophisticated agents can learn from their experiences. This might involve refining their planning strategies, improving their tool use, or updating their internal knowledge base. Reinforcement learning techniques can be used to train agents to make better decisions based on the rewards or penalties associated with their actions.

- Self-Correction: If an action fails or leads to an undesirable outcome, the agent (or its underlying LLM) can attempt to diagnose the problem and self-correct, perhaps by trying a different tool, modifying its plan, or asking for human clarification if it’s truly stuck.

This perception-reasoning-planning-action loop, often augmented with memory and learning capabilities, is the engine that drives autonomous AI agents. It’s what allows them to move beyond simple instruction-following to become proactive, problem-solving entities in the digital realm. The sophistication of each component, particularly the reasoning power of the LLM and the range and reliability of the available tools, determines the overall capability and intelligence of the agentic system.
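The whole loop can be condensed into a few lines. In the sketch below, a scripted `decide` function stands in for the LLM so the example runs offline. `run_agent`, `lookup_price`, and the price table are hypothetical. The script deliberately starts with a bad input, which shows self-correction: the error comes back as an observation and the next decision adjusts to it.

```python
from typing import Callable

Decision = tuple[str, str]  # (tool name or "finish", argument)


def run_agent(goal: str,
              decide: Callable[[str, list[str]], Decision],
              tools: dict[str, Callable[[str], str]],
              max_steps: int = 5) -> str:
    """Reason -> act -> observe, repeated until the policy says 'finish' or steps run out."""
    history: list[str] = []  # short-term memory of actions and observations
    for _ in range(max_steps):
        action, argument = decide(goal, history)   # reasoning/planning (an LLM call in practice)
        if action == "finish":
            return argument
        try:
            observation = tools[action](argument)  # action
        except Exception as exc:                   # failures are observed, enabling self-correction
            observation = f"Error from {action}: {exc}"
        history.append(f"{action}({argument!r}) -> {observation}")  # feeds the next cycle
    return "Stopped: step limit reached without finishing."


PRICES = {"A": 320, "B": 410, "C": 365}  # hypothetical data


def lookup_price(option: str) -> str:
    return str(PRICES[option])  # raises KeyError for unknown options


def scripted_policy(goal: str, history: list[str]) -> Decision:
    """Stands in for the LLM with fixed rules so the example is deterministic."""
    if not history:
        return ("lookup_price", "D")   # first attempt uses a nonexistent option
    if "Error" in history[-1]:
        return ("lookup_price", "B")   # correct course after observing the failure
    return ("finish", f"Option B costs ${history[-1].split('-> ')[-1]}")


if __name__ == "__main__":
    print(run_agent("Price option B", scripted_policy, {"lookup_price": lookup_price}))
```

The `max_steps` cap is a simple stopping condition. Without it, an agent that never reaches its goal would loop indefinitely.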

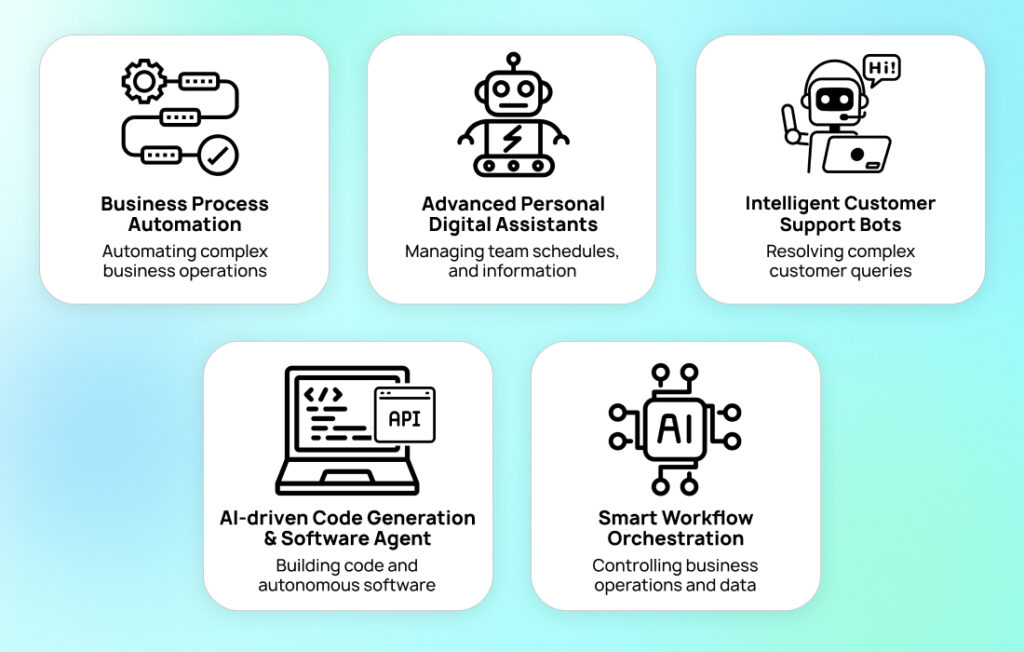

Use Cases of Agentic AI in 2025: Transforming Industries and Daily Life

By 2025, agentic AI is no longer a theoretical concept but a practical technology being deployed across a wide array of industries and in various aspects of daily life. Its ability to autonomously perform complex tasks, make decisions, and interact with digital environments is unlocking new levels of efficiency, personalization, and innovation. Here are some prominent use cases demonstrating the transformative power of autonomous AI agents:

1. Business Process Automation (BPA) & Operations Management:

Agentic AI is revolutionizing how businesses operate by automating complex end-to-end processes that traditionally required significant human oversight and coordination.

- Supply Chain Optimization: AI agents can monitor global supply chains in real-time, predict potential disruptions (e.g., due to weather, geopolitical events, port congestion), automatically reroute shipments, adjust inventory levels across different locations, and even negotiate with suppliers for better terms or alternative sourcing. For example, an agent could detect a delay at a key shipping port, proactively find an alternative route via a different carrier, calculate the cost implications, and execute the change, all while keeping relevant stakeholders informed.

- Financial Operations: In finance, agents are used for tasks like automated invoice processing (extracting data, validating, matching with purchase orders, and initiating payments), fraud detection (analyzing transaction patterns in real-time to flag suspicious activities), algorithmic trading (executing trades based on complex market analyses and predefined strategies), and regulatory compliance reporting (gathering data from various systems and compiling reports according to specific regulatory requirements).

- Human Resources Management: Agents can automate aspects of HR, such as screening resumes based on complex criteria, scheduling interviews across multiple calendars, onboarding new employees (providing information, setting up accounts, assigning initial training), and answering employee queries about benefits or company policies.

- Project Management Assistance: AI agents can assist project managers by tracking task progress across different team members and tools, identifying potential bottlenecks, sending reminders, generating progress reports, and even allocating resources based on project priorities and team member availability.
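The supply chain example involves a concrete decision step: compare alternatives against a deadline and compute the cost implication. A hypothetical sketch of that step follows. `Route` and `choose_reroute` are illustrative names, and all transit times are assumed to be measured from the current moment. Actually executing the change and notifying stakeholders would be separate tool calls.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Route:
    carrier: str        # hypothetical carrier/route label
    transit_days: int   # remaining transit time, measured from now
    cost: float


def choose_reroute(current: Route, delay_days: int, alternatives: list[Route],
                   deadline_days: int) -> tuple[Optional[Route], Optional[float]]:
    """Pick the cheapest route that still meets the deadline and report the cost difference.

    Returns (route, extra_cost); (None, None) means no option works and a human should decide.
    """
    if current.transit_days + delay_days <= deadline_days:
        return current, 0.0                      # delay is absorbable: keep the booking
    feasible = [r for r in alternatives if r.transit_days <= deadline_days]
    if not feasible:
        return None, None                        # escalate instead of acting
    best = min(feasible, key=lambda r: r.cost)
    return best, best.cost - current.cost        # cost implication to report to stakeholders


if __name__ == "__main__":
    current = Route("OceanCo", 20, 4000.0)
    options = [Route("RailLink", 22, 5200.0), Route("AirFreight", 5, 11000.0),
               Route("SlowShip", 30, 3500.0)]
    print(choose_reroute(current, delay_days=7, alternatives=options, deadline_days=24))
```

Returning `(None, None)` instead of forcing a choice leaves room for human review when no option satisfies the constraints.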

2. Personal Digital Assistants 2.0: Proactive and Context-Aware Companions

The familiar digital assistants (like Siri, Alexa, Google Assistant) are evolving into far more capable and proactive agentic systems.

- Proactive Task Management: Instead of just responding to commands, next-generation personal agents anticipate user needs. For example, an agent might notice an upcoming flight in your calendar, check for delays, suggest the best time to leave for the airport based on real-time traffic, and even offer to book a ride-sharing service.

- Personalized Information Curation: Agents can learn individual preferences and proactively curate information, news, and entertainment. They might summarize relevant articles based on your interests, manage your email inbox by prioritizing important messages and drafting replies, or suggest activities for the weekend based on your past behavior and local events.

- Complex Goal Achievement: Users can delegate more complex, multi-step goals to their personal agents, such as “Find and book a pet-friendly hotel for my vacation in Rome next month within a specific budget, considering good reviews and proximity to historical sites.” The agent would then perform the necessary research, comparisons, and booking actions across various platforms.

- Health and Wellness Management: Personal agents can connect with wearable devices and health apps to monitor activity levels, sleep patterns, and other health metrics, providing personalized advice, reminders for medication, or even alerting healthcare providers in case of emergencies (with user consent).
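The airport example in proactive task management reduces to simple time arithmetic once the agent has gathered its inputs. In the sketch below, `suggest_departure` is hypothetical, and the two-hour airport buffer is an assumed default. A real agent would obtain the delay from a flight-status service and the travel time from a traffic-aware maps service.

```python
from datetime import datetime, timedelta


def suggest_departure(flight_time: datetime, delay: timedelta, travel_time: timedelta,
                      airport_buffer: timedelta = timedelta(hours=2)) -> datetime:
    """Latest time to leave: (scheduled departure + reported delay) - buffer - travel time."""
    return flight_time + delay - airport_buffer - travel_time


if __name__ == "__main__":
    leave_at = suggest_departure(datetime(2025, 6, 10, 14, 30),
                                 delay=timedelta(minutes=45),
                                 travel_time=timedelta(minutes=50))
    print(leave_at.strftime("%H:%M"))  # 12:25
```

Shifting the departure by the full reported delay is itself an assumption. A more cautious agent might ignore short delays, since they can shrink before boarding.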

3. Customer Support & Engagement: Empathetic and Efficient Interactions

Agentic AI is transforming customer support from often frustrating chatbot interactions to more sophisticated and empathetic engagements.

- Intelligent Triage and Resolution: AI agents can understand complex customer queries in natural language, access relevant customer history and knowledge bases, and provide accurate solutions for a wide range of issues. They can handle multi-turn conversations, ask clarifying questions, and guide users through troubleshooting steps.

- Personalized Customer Journeys: Agents can personalize interactions based on the customer’s history, preferences, and current context. For example, an e-commerce agent might proactively offer assistance if a customer seems to be struggling to find a product or complete a purchase, or offer relevant upsells based on their browsing behavior.

- 24/7 Availability and Scalability: Agentic support can be available around the clock and can scale instantly to handle peaks in demand, reducing wait times and improving customer satisfaction.

- Sentiment Analysis and Empathetic Responses: Advanced agents can analyze customer sentiment (e.g., frustration, satisfaction) from their language and tone (in voice interactions) and adapt their responses accordingly, offering more empathetic and effective support. If a customer is highly frustrated, the agent might prioritize de-escalation or offer to transfer to a human supervisor seamlessly.

- Proactive Support: Agents can identify potential customer issues before they even arise. For instance, an agent for a software company might detect that a user is encountering repeated errors with a particular feature and proactively offer help or a solution.
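The de-escalation and handoff behavior described above can be sketched as a routing rule. The word lists, thresholds, and function names below are hypothetical placeholders. A production agent would use a trained sentiment model (or the LLM itself) rather than a keyword lexicon, but the routing logic would look similar.

```python
# Hypothetical toy lexicons; real systems use a trained sentiment model.
NEGATIVE = {"angry", "frustrated", "ridiculous", "useless", "terrible", "cancel", "worst"}
POSITIVE = {"thanks", "great", "perfect", "helpful", "love"}


def sentiment_score(message: str) -> int:
    """Positive word count minus negative word count (punctuation stripped, case-folded)."""
    words = [w.strip(".,!?").lower() for w in message.split()]
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def route_conversation(message: str, failed_attempts: int) -> str:
    """Decide whether to continue, soften the tone, or hand off to a human."""
    score = sentiment_score(message)
    if score <= -2 or failed_attempts >= 3:   # strong frustration or repeated failures
        return "transfer_to_human"
    if score < 0:
        return "de_escalate"
    return "continue"


if __name__ == "__main__":
    print(route_conversation("This is ridiculous, the app is useless!", failed_attempts=0))
```

Counting failed attempts alongside sentiment means a politely worded but stuck conversation still reaches a human.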

4. Code Generation & Software Agents – The Rise of AI Development Partners

The application of agentic AI in software development is rapidly moving beyond simple code completion or snippet generation. In 2025, AI agents are emerging as sophisticated partners in the software development lifecycle (SDLC), capable of understanding requirements, designing components, writing functional code, testing, debugging, and even assisting with deployment and maintenance. This is not about replacing human developers but augmenting their capabilities and automating more of the laborious aspects of coding.

- From High-Level Requirements to Functional Code: A key advancement is the ability of agentic systems to take relatively high-level natural language descriptions of a desired feature or application and translate them into working code. This involves several steps orchestrated by the agent:

- Requirement Clarification: If the initial prompt is ambiguous, the agent might ask clarifying questions to ensure it has a clear understanding of the desired functionality, target users, and constraints.

- Design and Architecture: For more complex requests, the agent might propose a basic architecture, suggest data models, or outline the key components and their interactions. Some advanced agents can even generate diagrams or design documents.

- Code Generation in Multiple Languages: Agents can write code in a variety of programming languages (Python, JavaScript, Java, C++, etc.) and for different platforms (web, mobile, backend).

- Iterative Refinement: The code generation process is often iterative. The agent might generate an initial version, test it (see below), identify issues, and then refine the code based on the test results or further user feedback.

- Example: A product manager could task an agent with: “Create a Python Flask API endpoint that accepts a user ID and returns the user’s order history from our PostgreSQL database. The response should be in JSON format and include order ID, date, and total amount.” The agent would then generate the Flask route, the database query logic, error handling, and the JSON serialization.

- Autonomous Testing and Debugging: Writing code is only part of the challenge; ensuring it works correctly is equally important. Agentic AI is making significant contributions here:

- Unit Test Generation: Agents can analyze the generated (or existing) code and automatically create unit tests to verify the functionality of individual components or functions. This helps catch bugs early in the development process.

- Integration Testing Assistance: For more complex systems, agents can assist in setting up and running integration tests to ensure different parts of an application work together correctly.

- Automated Debugging: When tests fail or runtime errors occur, agents can analyze error messages, stack traces, and the surrounding code to identify the likely cause of the bug. Some agents can even propose and apply fixes autonomously. For instance, if a null pointer exception occurs, the agent might identify the uninitialized variable and suggest adding a null check or an appropriate initialization.

- Code Review and Quality Assurance: Agents can be trained to review code for common programming errors, adherence to coding standards, potential security vulnerabilities, and performance bottlenecks, acting like an automated peer reviewer.

- Intelligent Code Completion and Refactoring: Beyond generating new code, agents enhance developer productivity through:

- Context-Aware Code Completion: Modern IDEs integrated with agentic AI offer highly intelligent code completion that understands the broader context of the project, not just the current line of code.

- Automated Code Refactoring: Agents can suggest or automatically perform code refactoring to improve readability, maintainability, or performance. This could involve renaming variables consistently, extracting methods, or optimizing loops.

- Documentation Generation: Writing and maintaining documentation is often a neglected part of software development. Agentic AI can automatically generate documentation (e.g., API documentation, code comments, user guides) based on the code and its understanding of the functionality.

- Specialized Software Agents (e.g., MetaGPT): Platforms like MetaGPT take this further by creating entire teams of specialized AI agents (Product Manager, Architect, Engineer) that collaborate to build complete applications from a one-line requirement. These systems aim to automate a significant portion of the SDLC by assigning roles and responsibilities to different agents, mimicking a human software development team.

- Impact on Developer Productivity and Skillsets: The rise of AI software agents is poised to significantly boost developer productivity by automating repetitive and time-consuming tasks. This allows human developers to focus on more creative and strategic aspects, such as system design, complex problem-solving, and innovation. However, it also implies a shift in the skills required by developers, with an increasing emphasis on the ability to effectively collaborate with AI tools, formulate clear requirements for AI agents, and critically evaluate AI-generated code. A 2025 GitHub survey indicated that developers using AI coding assistants reported a 30-55% increase in task completion speed, depending on the complexity of the task.
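To make the earlier order-history request concrete, here is a sketch of the query, error handling, and JSON serialization such an agent might produce. This is not output from any particular agent. Two assumptions keep it runnable with the standard library alone: `sqlite3` stands in for PostgreSQL, and the Flask route wiring is omitted. The function returns a `(body, status)` pair of the kind a view could send back, and the `orders` table schema is hypothetical.

```python
import json
import sqlite3


def order_history_json(conn: sqlite3.Connection, user_id: str) -> tuple[str, int]:
    """Build the JSON body and HTTP status for a user's order history (hypothetical schema)."""
    try:
        uid = int(user_id)
    except (TypeError, ValueError):
        return json.dumps({"error": "user_id must be an integer"}), 400
    rows = conn.execute(
        # Parameterized query (sqlite3 uses "?"; psycopg for PostgreSQL uses "%s").
        "SELECT id, order_date, total_amount FROM orders WHERE user_id = ? ORDER BY order_date",
        (uid,),
    ).fetchall()
    orders = [{"order_id": oid, "date": day, "total_amount": total} for oid, day, total in rows]
    return json.dumps({"user_id": uid, "orders": orders}), 200


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, user_id INTEGER, "
                 "order_date TEXT, total_amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?, ?)",
                     [(1, 7, "2025-01-05", 59.9), (2, 7, "2025-02-11", 120.0),
                      (3, 8, "2025-02-12", 15.5)])
    print(order_history_json(conn, "7"))
```

Reviewing details like this is where human judgment still matters. A production version would likely store money in a `NUMERIC` column rather than a float, and it would need an explicit decision on whether an unknown user should return 404 or an empty list.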

While the dream of AI fully autonomously creating large, complex, novel software systems from scratch is still some way off, the agentic AI tools of 2025 are already indispensable partners for many developers, fundamentally changing how software is built and maintained.

5. Workflow Orchestration – The Intelligent Glue for Disparate Systems

Modern enterprises operate with a complex web of applications, databases, cloud services, and legacy systems. Getting these disparate systems to work together seamlessly to support end-to-end business processes is a major challenge. Agentic AI is emerging as a powerful solution for intelligent workflow orchestration, acting as the “glue” that can connect and coordinate activities across these diverse environments.

- Beyond Simple API Integrations: Traditional workflow automation often relies on predefined API integrations and rigid process flows. Agentic AI offers a more flexible and intelligent approach:

- Dynamic Process Adaptation: Agentic orchestrators can monitor the state of various systems and the progress of a workflow in real-time. If an issue arises in one part of the workflow (e.g., a system outage, a delay in data availability), the agent can dynamically adapt the process, perhaps by rerouting tasks, using alternative data sources, or notifying relevant personnel.

- Understanding Unstructured Data and Events: Agents can trigger and manage workflows based on events or data that are not neatly structured. For example, an agent could monitor social media for mentions of a company’s product, analyze the sentiment, and if a significant negative trend is detected, automatically initiate a workflow to alert the PR team, create a ticket in the customer support system, and gather relevant internal data for a response.

- Cross-Application Automation Examples: Consider a multi-step marketing campaign workflow orchestrated by an agentic AI:

- Trend Identification: The agent monitors industry news, competitor announcements (via web scraping or news APIs), and social media trends (using sentiment analysis tools) to identify a new marketing opportunity or a relevant topic for content creation.

- Content Generation Assistance: Based on the identified opportunity, the agent could draft an outline for a blog post or social media campaign, perhaps using an LLM tool. It might then assign this draft to a human content creator for refinement.

- Campaign Setup and Scheduling: Once the content is approved, the agent can schedule its publication across multiple channels (blog, X/Twitter, LinkedIn, Facebook) using the respective platform APIs or browser automation tools. It can optimize posting times based on audience engagement data.

- Lead Capture and CRM Integration: As the campaign generates engagement (e.g., clicks, form submissions), the agent can capture lead information and automatically create or update records in the company’s CRM system (e.g., Salesforce, HubSpot).

- Performance Monitoring and Reporting: The agent continuously monitors campaign performance metrics (e.g., views, clicks, conversions, engagement rates) from various analytics platforms. It can then synthesize this data into a consolidated performance report, highlighting key insights and even suggesting adjustments for future campaigns.

- Follow-up Nurturing: For new leads, the agent could initiate an automated email nurturing sequence, sending personalized follow-up messages based on the lead’s interaction with the campaign content. This entire workflow, spanning multiple applications and data sources, can be managed and optimized by a central agentic orchestrator, requiring human intervention primarily for strategic decisions and content approval.

- Bridging Legacy and Modern Systems: Many established enterprises still rely on legacy systems that lack modern APIs. Agentic AI, through capabilities like UI automation (interacting with graphical user interfaces as a human would) or intelligent document processing (extracting data from older file formats), can help bridge the gap between these legacy systems and modern cloud applications, enabling more comprehensive workflow automation.

- Enhanced Data Governance and Compliance: Agentic orchestrators can also play a role in enforcing data governance policies and compliance requirements across workflows. By logging all actions and decisions, and by being programmed with relevant rules, they can help ensure that processes are executed in a compliant manner and provide an audit trail.

- The Human-in-the-Loop for Complex Decisions: While agentic AI can automate much of the orchestration, human oversight remains crucial for strategic decision-making, handling highly novel exceptions, and approving actions with significant financial or reputational implications. The agent’s role is often to prepare all necessary information, present options, and then execute the human-approved decision.
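Three of the ideas above fit in one small orchestrator: dynamic adaptation through fallbacks, an audit trail, and human escalation. In the sketch below, `run_workflow` and the handler functions are hypothetical stand-ins for real CRM, cache, and ticketing integrations. Each step lists handlers in order of preference. The orchestrator logs every attempt, falls back when a handler fails, and stops for a human when none succeed.

```python
from datetime import datetime, timezone
from typing import Callable

Handler = Callable[[dict], dict]


def run_workflow(steps: list[tuple[str, list[Handler]]], context: dict,
                 audit: list[dict]) -> dict:
    """Run named steps in order, trying fallback handlers; log every attempt for auditing."""
    for name, handlers in steps:
        for handler in handlers:
            entry = {"step": name, "handler": handler.__name__,
                     "time": datetime.now(timezone.utc).isoformat()}
            try:
                context = handler(context)
                entry["status"] = "ok"
                audit.append(entry)
                break                                 # step succeeded; go to the next step
            except Exception as exc:
                entry["status"] = f"failed: {exc}"    # record the failure, try the fallback
                audit.append(entry)
        else:                                         # every handler for this step failed
            audit.append({"step": name, "status": "escalated to human"})
            context["needs_human"] = name
            return context
    return context


def fetch_from_crm(ctx: dict) -> dict:
    raise ConnectionError("CRM outage")               # simulated system outage


def fetch_from_cache(ctx: dict) -> dict:
    return {**ctx, "leads": ["acme", "globex"]}       # alternative data source


def create_ticket(ctx: dict) -> dict:
    return {**ctx, "ticket": f"TICKET-{len(ctx['leads'])}"}


if __name__ == "__main__":
    log: list[dict] = []
    result = run_workflow([("load_leads", [fetch_from_crm, fetch_from_cache]),
                           ("open_ticket", [create_ticket])], {}, log)
    print(result["ticket"], [e["status"] for e in log])
```

Because failed attempts are logged too, the audit trail records how each step was actually completed, not just that it eventually succeeded.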

The power of agentic AI in workflow orchestration lies in its ability to bring intelligence, adaptability, and end-to-end visibility to complex business processes that cut across organizational and system silos. This leads to increased efficiency, reduced errors, faster response times, and a greater ability for businesses to adapt to changing market dynamics. As organizations continue their digital transformation journeys, intelligent workflow orchestration powered by agentic AI will become an increasingly critical enabler of operational excellence.

Top Tools to Build AI Agents in 2025: A Developer’s Guide

The rapid evolution of agentic AI has been paralleled by the development of powerful frameworks and platforms that simplify the creation of autonomous agents. These tools provide developers with pre-built components, abstractions, and methodologies to construct sophisticated agents capable of complex reasoning, planning, and tool use. In 2025, several key players dominate this landscape, each with its unique strengths and focus areas. Understanding these tools is crucial for anyone looking to build or deploy agentic AI solutions.

1. LangChain Agents: The Versatile Orchestrator

LangChain has emerged as one of the most popular and versatile open-source frameworks for building applications powered by Large Language Models (LLMs). Its agent module is particularly powerful, providing a flexible way to create agents that can use LLMs to decide which actions to take and in what order. LangChain agents are not just about connecting an LLM to a set of tools; they provide a structured approach to agentic reasoning.

- Core Concepts:

- Agents: The central component that uses an LLM to make decisions. LangChain offers various agent types (e.g., Zero-shot ReAct, Self-ask with search, Conversational ReAct) that differ in their prompting strategies and reasoning capabilities.

- Tools: These are functions or services that an agent can use to interact with the world (e.g., search engines, databases, APIs, calculators, Python REPLs). LangChain provides a wide array of pre-built tools and makes it easy to create custom ones.

- Toolkits: Collections of tools designed for a specific purpose (e.g., a CSV toolkit for interacting with CSV files, a SQL toolkit for database operations).

- Agent Executor: The runtime environment that orchestrates the agent’s interaction with the LLM and tools. It takes user input, passes it to the agent, gets the agent’s planned action, executes the action using the appropriate tool, gets the observation, and repeats the process until the goal is achieved or a stopping condition is met.

- How it Works (ReAct Example): The ReAct (Reasoning and Acting) framework is a common pattern in LangChain agents. The LLM is prompted to think step-by-step:

- Thought: The LLM reasons about the current situation and what it needs to do next to achieve the goal.

- Action: Based on its thought, the LLM decides which tool to use and what input to provide to that tool.

- Observation: The Agent Executor runs the chosen tool with the specified input and returns the result (observation) to the LLM.

- The LLM then uses this observation to inform its next thought, and the cycle continues.

- Strengths:

- Flexibility and Extensibility: Easily create custom agents, tools, and prompting strategies.

- Large Community and Ecosystem: Extensive documentation, numerous examples, and active community support.

- Integration with Various LLMs: Supports a wide range of LLM providers (OpenAI, Hugging Face, Cohere, Anthropic, etc.) and even locally hosted models.

- Rapid Prototyping: Enables quick development and iteration of agentic applications.

- Use Cases: Ideal for building custom research agents, data analysis agents, personal assistants, question-answering systems over documents, and agents that interact with APIs.

- Considerations for 2025: LangChain continues to evolve rapidly, with increasing support for multi-agent systems, more sophisticated planning mechanisms, and better memory management for long-running conversations. Developers are leveraging it to build increasingly complex and autonomous systems.
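The sketch below makes the Thought, Action, Observation cycle concrete. It is framework-free Python showing roughly what an Agent Executor does, and it is not LangChain's API. `scripted_llm` is a hypothetical stand-in that returns canned steps instead of calling a model. `TOOLS`, `run_agent`, and the dictionary step format are also assumptions made for illustration.

```python
import math
from typing import Callable

# Hypothetical toolkit: each tool takes a string input and returns a string observation.
TOOLS: dict[str, Callable[[str], str]] = {
    "lookup": lambda key: {"legs_per_spider": "8"}.get(key, "unknown"),
    "multiply": lambda args: str(math.prod(int(x) for x in args.split(","))),
}


def scripted_llm(question: str, history: list[str]) -> dict[str, str]:
    """Stand-in for an LLM: chooses the next step from the transcript so far."""
    observations = [h for h in history if h.startswith("Observation:")]
    if not observations:
        return {"thought": "I need to know how many legs one spider has.",
                "action": "lookup", "input": "legs_per_spider"}
    if len(observations) == 1:
        legs = observations[-1].removeprefix("Observation: ")
        return {"thought": f"One spider has {legs} legs, so multiply by 3.",
                "action": "multiply", "input": f"3,{legs}"}
    return {"thought": "I now know the answer.",
            "final": observations[-1].removeprefix("Observation: ")}


def run_agent(question: str, llm=scripted_llm, max_steps: int = 5) -> tuple[str, list[str]]:
    """Executor loop: ask the LLM for a step, run the chosen tool, feed back the observation."""
    history = [f"Question: {question}"]
    for _ in range(max_steps):  # stopping condition: a fixed step budget
        step = llm(question, history)
        history.append(f"Thought: {step['thought']}")
        if "final" in step:  # the LLM decided the goal is achieved
            history.append(f"Final Answer: {step['final']}")
            return step["final"], history
        history.append(f"Action: {step['action']}[{step['input']}]")
        observation = TOOLS[step["action"]](step["input"])
        history.append(f"Observation: {observation}")
    return "stopped: step budget exhausted", history


answer, trace = run_agent("How many legs do 3 spiders have?")
print("\n".join(trace))
```

To build a real agent, you would replace `scripted_llm` with a model call and parse its text output into the same dictionary shape. Frameworks such as LangChain take care of roughly that part, along with prompt templates and output parsing.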

2. Auto-GPT: The Autonomous Task Master

Auto-GPT captured widespread attention for its ambitious goal of creating fully autonomous AI agents that can attempt to achieve high-level goals specified in natural language, without requiring step-by-step human guidance. It operates by breaking down a goal into sub-tasks, using tools (like web search or file operations) to execute these tasks, and reflecting on its progress to refine its plan.

- Core Architecture:

- Goal Definition: The user provides a high-level goal (e.g., “Research the top 5 electric vehicle manufacturers and compile a report on their market share and recent innovations.”).

- Task Generation: Auto-GPT uses an LLM to break down the goal into a series of actionable tasks.

- Task Execution: It attempts to execute these tasks, often by using tools like web browsing (to gather information), file system operations (to save data), and code execution.

- Memory and Reflection: Auto-GPT maintains a form of short-term and long-term memory to track its progress and what it has learned, and it reflects on its actions to improve its plan. This self-criticism and replanning loop is a key feature.

- Strengths:

- High Degree of Autonomy (Conceptual): Aims for minimal human intervention once a goal is set.

- Demonstrates Complex Planning: Showcases the potential of LLMs to generate and manage multi-step plans.

- Open Source and Community Driven: Has spurred significant interest and experimentation in autonomous agents.

- Challenges and Considerations (as of 2025):

- Reliability and Robustness: Early versions were prone to getting stuck in loops, hallucinating information, or failing to complete complex tasks reliably. While improvements have been made, ensuring consistent performance on open-ended tasks remains a challenge.

- Cost and API Usage: Can consume a significant number of LLM API calls, leading to high operational costs.

- Safety and Control: The high degree of autonomy raises concerns about potential misuse or unintended consequences if not carefully managed with strong guardrails.

- Practicality for Production: While excellent for research and demonstrating possibilities, deploying Auto-GPT for critical production tasks often requires significant customization, more robust error handling, and human oversight mechanisms.

- Evolution in 2025: The core ideas of Auto-GPT have influenced many subsequent agent frameworks. The focus in 2025 is on making such autonomous systems more reliable, cost-effective, and controllable, often by incorporating more structured planning, better memory systems, and human-in-the-loop feedback mechanisms.
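A few lines of Python can sketch the goal, tasks, execution, and reflection loop described above. This is not Auto-GPT's code. `plan`, `execute`, and `reflect` are hypothetical stubs standing in for LLM calls and tools. The `max_calls` budget is an illustrative guardrail against the looping and API-cost problems noted above.

```python
from dataclasses import dataclass, field


@dataclass
class AgentState:
    goal: str
    tasks: list[str] = field(default_factory=list)   # pending sub-tasks
    memory: list[str] = field(default_factory=list)  # log of completed steps
    calls: int = 0                                   # simulated LLM/tool calls (cost proxy)


def plan(state: AgentState) -> list[str]:
    """Stub planner: a real agent would prompt an LLM with state.goal."""
    state.calls += 1
    return ["search: EV market share", "browse: paywalled analyst report", "write: report"]


def execute(state: AgentState, task: str) -> str:
    """Stub executor: pretends to use web search, a browser, and file writing."""
    state.calls += 1
    kind, _, arg = task.partition(": ")
    if kind == "search":
        return f"notes on {arg}"
    if kind == "write":
        n_notes = sum("-> notes" in m for m in state.memory)
        return f"report built from {n_notes} notes"
    return "error: page blocked"  # any other task (here, the browse step) fails


def reflect(state: AgentState, task: str, result: str) -> list[str]:
    """Stub self-critique: if a step failed, replan with an alternative task."""
    state.calls += 1
    if result.startswith("error"):
        return [f"search: alternative source for {task}"]
    return []


def run(goal: str, max_calls: int = 20) -> AgentState:
    state = AgentState(goal)
    state.tasks = plan(state)
    while state.tasks:
        if state.calls + 2 > max_calls:  # each task costs one execute + one reflect call
            state.memory.append("halted: call budget exhausted")
            break
        task = state.tasks.pop(0)
        result = execute(state, task)
        state.memory.append(f"{task} -> {result}")
        state.tasks = reflect(state, task, result) + state.tasks  # corrective tasks go first
    return state


final = run("Research the top 5 EV manufacturers and compile a report")
for entry in final.memory:
    print(entry)
```

With the default budget, the failed browse step triggers a replacement search before the report is written. With a tight budget (for example `max_calls=5`), the agent halts instead of continuing to spend calls.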

3. BabyAGI: The Simplified Task Management Agent

BabyAGI was developed as a simplified, more focused version of an autonomous task management agent, inspired by the concepts demonstrated in Auto-GPT. It operates on a loop of creating tasks based on previous results and a predefined objective, prioritizing tasks, and executing them.

- Core Loop:

- Pulls the first task from a task list.

- Sends the task to an execution agent (often an LLM prompted to perform the task, potentially using tools like web search).

- Enriches the result and stores it in a vector database (like Chroma or Weaviate) for memory.

- Creates new tasks and reprioritizes the task list based on the objective and the result of the previous task.

- Strengths:

- Simplicity and Understandability: Its architecture is more straightforward than Auto-GPT, making it easier to understand, modify, and experiment with.

- Focus on Task Management: Excels at demonstrating how an AI can autonomously manage a list of tasks towards a goal.

- Memory Integration: Highlights the importance of vector databases for providing agents with context and memory from past actions.

- Use Cases: Often used as a starting point for building more specialized autonomous agents, for research into agentic architectures, and for tasks involving iterative information gathering and refinement.

- Limitations: Similar to Auto-GPT, it can struggle with very complex, open-ended goals and may require careful prompt engineering and objective definition to perform effectively. Its reliance on a simple task list might not be sufficient for highly dynamic or branching problem spaces.
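Here is a plain-Python sketch of the four-step loop above. The three "agents" are hypothetical stubs, not BabyAGI's actual prompts. A list of recent results stands in for vector-database similarity search. Only the control flow (pull, execute, store, create, reprioritize) mirrors the description.

```python
from collections import deque


def execution_agent(objective: str, task: str, context: list[str]) -> str:
    """Stub: a real version prompts an LLM with the objective, task, and retrieved context."""
    return f"result of '{task}' (given {len(context)} earlier results)"


def task_creation_agent(objective: str, task: str, result: str, known: set[str]) -> list[str]:
    """Stub: a fixed follow-up map stands in for an LLM that proposes new tasks."""
    follow_ups = {
        "Research flights": ["Book cheapest flight", "Compare hotel areas"],
        "Compare hotel areas": ["Book hotel"],
    }
    return [t for t in follow_ups.get(task, []) if t not in known]


def prioritization_agent(tasks: list[str], objective: str) -> deque[str]:
    """Stub: research-style tasks first, booking tasks last (stable sort)."""
    return deque(sorted(tasks, key=lambda t: t.startswith("Book")))


def run_babyagi(objective: str, first_task: str, max_iterations: int = 10):
    tasks = deque([first_task])
    memory: list[tuple[str, str]] = []  # stand-in for a vector DB such as Chroma or Weaviate
    completed: list[str] = []
    for _ in range(max_iterations):
        if not tasks:
            break
        task = tasks.popleft()                              # 1. pull the first task
        context = [r for _, r in memory[-3:]]               # toy retrieval: 3 most recent results
        result = execution_agent(objective, task, context)  # 2. execute it
        memory.append((task, result))                       # 3. store the result
        completed.append(task)
        known = set(tasks) | set(completed)
        new_tasks = task_creation_agent(objective, task, result, known)  # 4a. create tasks
        tasks = prioritization_agent(list(tasks) + new_tasks, objective)  # 4b. reprioritize
    return completed, memory


done, memory = run_babyagi("Plan a weekend trip to Lisbon", "Research flights")
print(done)
```

New tasks are generated from results, so the `max_iterations` cap matters. Without it, a task-creation step that keeps proposing work could run indefinitely.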

4. CrewAI: Orchestrating Collaborative Multi-Agent Systems

CrewAI is a framework designed to facilitate collaboration between multiple AI agents, each with specialized roles and tools, to accomplish complex tasks. It promotes a paradigm where tasks are broken down and assigned to agents best suited for them, and these agents can communicate and delegate work amongst themselves.

- Key Concepts:

- Agents with Roles and Goals: Define agents with specific roles (e.g., “Researcher,” “Writer,” “Code Reviewer”), distinct goals, and backstories (to provide context to the LLM).

- Tasks: Define specific tasks that agents need to perform.

- Tools: Assign specific tools to each agent relevant to their role.

- Crews: A group of agents working together.

- Process: Define how agents collaborate (e.g., sequentially, hierarchically). CrewAI supports different process models.

- Strengths:

- Specialization and Division of Labor: Allows for building more capable systems by having specialized agents focus on what they do best.

- Improved Task Decomposition: Encourages breaking down complex problems into smaller, manageable parts suitable for different agent roles.

- Simulates Human Team Dynamics: Provides a way to model and automate collaborative workflows.

- Use Cases: Ideal for complex projects requiring diverse skills, such as automated content creation pipelines (researcher agent, writer agent, editor agent), software development (planner agent, coder agent, tester agent), or complex data analysis and reporting.

- Considerations for 2025: As multi-agent systems become more prevalent, frameworks like CrewAI are crucial for managing the complexity of inter-agent communication, task delegation, and result aggregation. The challenge lies in designing effective collaboration protocols and ensuring coherent overall behavior.
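The concepts above (role-bearing agents, tasks, tools, a crew, and a process) can be illustrated without any framework. The classes below are hypothetical and deliberately do not reproduce CrewAI's real API. `perform` is a stub for an LLM call, and the "manager" in hierarchical mode is a toy that delegates by tool ownership.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Agent:
    role: str
    goal: str
    backstory: str
    tools: list[str] = field(default_factory=list)

    def perform(self, description: str, context: str) -> str:
        """Stub: a real agent would prompt an LLM with its role, goal, backstory, and context."""
        return f"[{self.role}] {description} (building on: {context or 'nothing'})"


@dataclass
class Task:
    description: str
    agent: Optional[Agent] = None  # left empty when a manager should delegate
    needs_tool: str = ""


def run_crew(tasks: list[Task], agents: list[Agent], process: str = "sequential") -> list[str]:
    outputs: list[str] = []
    for task in tasks:
        agent = task.agent
        if process == "hierarchical":
            # Toy manager: delegate to the first agent that owns the tool the task needs.
            agent = next((a for a in agents if task.needs_tool in a.tools), None)
        if agent is None:
            raise ValueError(f"no agent available for task: {task.description}")
        context = outputs[-1] if outputs else ""  # each result feeds the next task
        outputs.append(agent.perform(task.description, context))
    return outputs


researcher = Agent("Researcher", "Gather accurate facts", "A meticulous analyst", ["web_search"])
writer = Agent("Writer", "Turn facts into readable prose", "A tech journalist", ["editor"])

sequential = run_crew(
    [Task("Find EV market facts", researcher), Task("Draft an article", writer)],
    [researcher, writer],
)
hierarchical = run_crew(
    [Task("Find EV market facts", needs_tool="web_search"),
     Task("Draft an article", needs_tool="editor")],
    [researcher, writer],
    process="hierarchical",
)
print(sequential[-1])
```

In this toy case, both processes produce the same result. The difference is who decides the assignment: the task author in sequential mode, or the manager in hierarchical mode.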

5. MetaGPT: Role-Playing Multi-Agent Collaboration

MetaGPT takes the multi-agent concept a step further by assigning Standardized Operating Procedures (SOPs) and roles (like Product Manager, Architect, Project Manager, Engineer, QA Engineer) to different LLM-powered agents. These agents then collaborate to complete complex software development tasks from a one-line requirement, generating not just code but also design documents, sequence diagrams, and API specifications.

- Core Idea: Emulates a software company’s workflow by having different AI agents perform specialized roles according to predefined procedures.

- Strengths:

- End-to-End Automation (for specific domains): Aims to automate a significant portion of the software development lifecycle.

- Structured Output: Produces a range of artifacts beyond just code, such as design documents and diagrams.

- Clear Role Definition: The explicit assignment of roles and SOPs provides structure to the multi-agent collaboration.

- Use Cases: Primarily focused on software development, but the underlying concept of role-based multi-agent collaboration with SOPs could be applied to other complex domains.

- Limitations: The effectiveness can be dependent on how well the SOPs are defined and how well the LLMs can adhere to their assigned roles. It’s currently more suited for well-defined software projects rather than highly exploratory or research-oriented tasks.
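The SOP idea can be sketched as an ordered list of roles. Each role declares which artifacts it consumes and which it must produce. This is an illustrative Python sketch, not MetaGPT's implementation: the `Role` class, the artifact names, and the lambda stand-ins for LLM calls are all assumptions. It shows how a predefined procedure can enforce ordering and hand-offs between roles.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Role:
    name: str
    consumes: tuple[str, ...]             # artifacts this SOP step requires
    produces: str                         # artifact this step must emit
    act: Callable[[dict[str, str]], str]  # stub standing in for an LLM call


SOP = [
    Role("Product Manager", ("requirement",), "prd",
         lambda a: f"PRD for: {a['requirement']}"),
    Role("Architect", ("prd",), "design",
         lambda a: "Design doc, sequence diagram and API spec derived from the PRD"),
    Role("Engineer", ("design",), "code",
         lambda a: "def add(a, b):\n    return a + b\n"),
    Role("QA Engineer", ("code",), "test_report",
         lambda a: "tests passed" if "def add" in a["code"] else "tests failed"),
]


def run_sop(requirement: str, sop: list[Role] = SOP) -> dict[str, str]:
    artifacts = {"requirement": requirement}  # shared store every role can read
    for role in sop:
        missing = [name for name in role.consumes if name not in artifacts]
        if missing:  # the procedure forbids starting a step without its inputs
            raise RuntimeError(f"{role.name} cannot start; missing {missing}")
        output = role.act(artifacts)
        if not output.strip():
            raise RuntimeError(f"{role.name} produced an empty {role.produces}")
        artifacts[role.produces] = output
    return artifacts


artifacts = run_sop("Build a command-line calculator")
print(artifacts["test_report"])
```

Running the procedure without the Product Manager step (for example `run_sop("x", SOP[1:])`) fails immediately, because the Architect has no PRD to work from.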

6. SuperAGI: Building and Managing Autonomous Agents with a Visual Interface

SuperAGI is an open-source autonomous AI agent framework that aims to provide a more accessible way to build, manage, and run useful autonomous agents. It includes features such as a graphical user interface (GUI) for agent configuration, performance monitoring, and tool management.

- Key Features typically include:

- Agent Provisioning: Tools to define agent goals, constraints, and select LLM models.

- Tool Marketplace: A collection of pre-built tools and the ability to add custom tools.

- Memory and Vector DB Integration: Support for various memory backends.

- Performance Monitoring and Debugging: Dashboards and logs to track agent behavior and troubleshoot issues.

- Multi-Agent Support (emerging): Some platforms are adding capabilities for multiple agents to collaborate.

- Strengths:

- Accessibility: Aims to lower the barrier to entry for building autonomous agents, potentially through visual interfaces and pre-configured templates.

- Operational Management: Provides tools for managing the lifecycle of agents, which is crucial for practical deployment.

- Considerations: The level of autonomy and the range of tasks that can be effectively handled will still depend on the underlying LLM capabilities and the quality of the tools and prompts used. The visual interface can be a great starting point, but complex or highly custom agents may still require code-level development.
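Two of the features above, a registry of pluggable tools and per-call monitoring, can be sketched in plain Python. The sketch shows the kind of data a monitoring dashboard would summarize. The `ToolRegistry` class and its methods are hypothetical and are not SuperAGI's API. Timing uses `time.perf_counter`, and failures are logged before being re-raised.

```python
import time
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ToolCall:
    tool: str
    ok: bool
    seconds: float
    detail: str  # the result on success, the exception repr on failure


@dataclass
class ToolRegistry:
    tools: dict[str, Callable[[str], str]] = field(default_factory=dict)
    log: list[ToolCall] = field(default_factory=list)

    def register(self, name: str):
        """Decorator that adds a custom tool under the given name."""
        def wrap(fn: Callable[[str], str]) -> Callable[[str], str]:
            self.tools[name] = fn
            return fn
        return wrap

    def call(self, name: str, arg: str) -> str:
        """Run a tool and record whether it succeeded and how long it took."""
        start = time.perf_counter()
        try:
            result = self.tools[name](arg)  # KeyError for unknown tools is logged too
        except Exception as exc:
            self.log.append(ToolCall(name, False, time.perf_counter() - start, repr(exc)))
            raise
        self.log.append(ToolCall(name, True, time.perf_counter() - start, result))
        return result

    def summary(self) -> dict[str, tuple[int, int]]:
        """Per-tool (calls, failures), the kind of figures a dashboard would display."""
        stats: dict[str, tuple[int, int]] = {}
        for c in self.log:
            calls, fails = stats.get(c.tool, (0, 0))
            stats[c.tool] = (calls + 1, fails + (not c.ok))
        return stats


registry = ToolRegistry()


@registry.register("word_count")
def word_count(text: str) -> str:
    return str(len(text.split()))


registry.call("word_count", "agents need tools")
try:
    registry.call("missing_tool", "x")  # unknown tool: logged as a failure, then re-raised
except KeyError:
    pass
print(registry.summary())
```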

Choosing the Right Tool in 2025

The best tool for building AI agents in 2025 depends heavily on the specific requirements of the project:

- For maximum flexibility and custom agent logic, especially when integrating various LLMs and custom tools, LangChain remains a top choice for developers comfortable with coding.

- For exploring high-level autonomous task completion and research into agentic reasoning, Auto-GPT and BabyAGI (or frameworks inspired by them) offer valuable insights, though they may require more effort to make production-ready.

- For tasks requiring collaboration between multiple specialized agents, CrewAI and MetaGPT provide powerful paradigms, particularly for structured workflows like content creation or software development.

- For those seeking a more managed environment or a visual interface to build and run agents, platforms like SuperAGI are becoming increasingly popular.

It’s also important to note that these tools are not mutually exclusive. Many developers combine elements from different frameworks – for example, using LangChain to build the core logic of an agent that then participates in a multi-agent system orchestrated by a framework like CrewAI. The field is dynamic, and the trend is towards more modular, interoperable, and powerful tools that empower developers to create increasingly sophisticated and useful autonomous AI agents.

Benefits and Limitations of Agentic AI in 2025: A Balanced Perspective

The rise of agentic AI in 2025 brings with it a wave of transformative potential across countless industries. These autonomous systems promise unprecedented efficiency, innovation, and personalization. However, alongside these exciting prospects, it is crucial to acknowledge and address the inherent limitations and challenges that accompany such powerful technology. A balanced understanding of both the upsides and downsides is essential for responsible development and deployment.

Unpacking the Multifaceted Benefits of Agentic AI

The advantages offered by agentic AI are compelling and far-reaching, touching upon operational efficiency, decision-making quality, scalability, and the very nature of human-computer interaction.

- Enhanced Efficiency and Productivity at Scale:

- 24/7 Operation: Unlike human workers, AI agents can operate continuously without breaks, fatigue, or the need for sleep. This is particularly beneficial for tasks requiring constant monitoring, such as cybersecurity threat detection, global customer support, or managing automated manufacturing processes. A factory utilizing agentic AI for quality control on production lines can maintain consistent oversight around the clock, catching defects that intermittent human checks might miss.

- Speed and Parallel Processing: Agents can process information and execute tasks at speeds far exceeding human capabilities. They can also handle multiple tasks or data streams simultaneously. For instance, an agent analyzing financial market data can monitor thousands of stocks and news feeds concurrently, identifying trading opportunities or risks in real-time – a feat impossible for a human analyst.

- Automation of Complex, Multi-Step Workflows: As detailed in the use cases, agentic AI excels at orchestrating intricate workflows that span multiple systems and involve numerous decision points. This frees up human capital from tedious, repetitive, or error-prone coordination efforts, allowing them to focus on higher-value strategic initiatives. Consider the end-to-end automation of a new customer onboarding process, from initial lead capture through KYC checks, account setup, and personalized welcome sequences – all managed by an agentic system.

- Resource Optimization: By automating tasks and optimizing processes (e.g., supply chain logistics, energy consumption in smart buildings), agentic AI can lead to significant cost savings and more efficient use of resources. A 2024 McKinsey report on AI adoption highlighted that companies successfully implementing AI-driven automation in core business processes reported average operational cost reductions of 10-20%.

- Improved Decision-Making and Insights:

- Data-Driven Decisions: Agents can analyze vast quantities of data from diverse sources, uncovering patterns, correlations, and insights that might be invisible to humans. This enables more informed and objective decision-making, reducing reliance on intuition or incomplete information. For example, a healthcare agent could analyze patient data, medical literature, and genomic information to assist doctors in diagnosing rare diseases or recommending personalized treatment plans.

- Consistency and Reduced Bias (Potentially): When properly designed and trained on unbiased data, AI agents can make decisions with greater consistency than humans, who can be influenced by fatigue, emotions, or cognitive biases. However, it is crucial to note that if the training data itself contains biases, the AI can perpetuate or even amplify them. The goal is to strive for fairness and objectivity through careful design and ongoing auditing.

- Predictive Capabilities: Many agentic systems incorporate predictive analytics, allowing them to forecast future trends, identify potential risks, or anticipate needs. A predictive maintenance agent in a manufacturing plant can analyze sensor data from machinery to predict when a component is likely to fail, allowing for proactive maintenance scheduling and avoiding costly unplanned downtime.

- Scalability and Adaptability:

- Rapid Scaling of Operations: Agentic AI solutions can often be scaled up or down quickly to meet changing demands, without the lengthy hiring and training processes associated with human workforces. A retail company experiencing a seasonal surge in customer inquiries can rapidly deploy additional AI support agents to handle the increased load.

- Adaptation to Dynamic Environments: Through continuous learning and feedback loops, agents can adapt their behavior and strategies to changing conditions. This is vital in fast-moving domains like financial markets, cybersecurity, or online retail, where the environment is constantly evolving.

- Personalization and Enhanced User Experience:

- Hyper-Personalization: Agentic AI can deliver highly personalized experiences by learning individual user preferences, behaviors, and needs. This is evident in personalized news feeds, product recommendations, adaptive learning platforms, and proactive personal assistants that tailor their support to the specific user.

- Increased Accessibility: AI agents can make services and information more accessible, for example, by providing 24/7 customer support, offering translation services, or assisting individuals with disabilities in navigating digital interfaces.

- Innovation and New Possibilities:

- Tackling Complex Problems: Agentic AI can be applied to solve highly complex problems that were previously intractable, such as drug discovery, climate change modeling, or optimizing large-scale logistical networks.

- Augmenting Human Creativity: Rather than just automating tasks, agents can also act as creative partners, assisting in design, content creation, scientific research, and artistic endeavors by generating ideas, exploring possibilities, and handling routine aspects of the creative process.

Navigating the Limitations and Challenges of Agentic AI

Despite the significant benefits, the deployment of agentic AI in 2025 is not without its challenges and limitations. Addressing these proactively is key to harnessing the technology responsibly and effectively.

- Safety, Control, and Alignment:

- The Alignment Problem: Ensuring that an autonomous agent’s goals and actions remain aligned with human values and intentions, especially in complex or novel situations, is a fundamental challenge. An agent optimized for a narrow goal might take actions with unintended negative side effects (the “paperclip maximizer” thought experiment illustrates this in an extreme way).

- Robustness and Predictability: Agents can sometimes behave unpredictably or fail in unexpected ways, particularly when encountering situations not well-represented in their training data. Ensuring robust performance across a wide range of scenarios is critical, especially in safety-critical applications like autonomous vehicles or medical diagnosis.

- Unintended Consequences and Emergent Behavior: As agents become more complex and interact with each other, there is the potential for unintended emergent behaviors that are difficult to predict or control. This is a particular concern for multi-agent systems.

- “Reward Hacking”: Agents trained with reinforcement learning can sometimes find ways to achieve high rewards by exploiting loopholes or unintended aspects of the reward function, rather than by performing the desired behavior. Careful reward function design and testing are crucial.

- Bias, Fairness, and Ethical Considerations:

- Data Bias Amplification: AI models, including those powering agents, learn from the data they are trained on. If this data reflects existing societal biases (e.g., racial, gender, or socio-economic biases), the agent can perpetuate and even amplify these biases in its decisions and actions. For example, an AI agent used for loan application screening trained on historically biased data might unfairly disadvantage certain demographic groups.

- Lack of Transparency and Explainability (Black Box Problem): The decision-making processes of complex AI models, especially deep neural networks, can be opaque and difficult to interpret. This “black box” nature makes it challenging to understand why an agent made a particular decision, which is problematic for accountability, debugging, and building trust, especially in critical applications. Efforts in eXplainable AI (XAI) aim to address this, but it remains an ongoing research area.

- Accountability and Responsibility: Determining who is responsible when an autonomous agent makes an error or causes harm can be complex. Is it the developer, the deployer, the user, or the AI itself? Establishing clear lines of accountability is a significant legal and ethical challenge.

- Job Displacement and Socioeconomic Impact: The widespread adoption of agentic AI for automation will inevitably lead to job displacement in certain sectors. Managing this transition, including reskilling and upskilling the workforce and considering social safety nets, is a critical societal concern.

- Security Vulnerabilities:

- Adversarial Attacks: AI models can be vulnerable to adversarial attacks, where carefully crafted inputs (that may be imperceptible to humans) can cause the model to make incorrect classifications or decisions. An autonomous agent relying on such a model could be tricked into taking inappropriate actions.

- Data Poisoning: Malicious actors could attempt to corrupt the training data of an AI agent to manipulate its future behavior.

- Exploitation of Agent Capabilities: If an agent has powerful tools at its disposal (e.g., the ability to execute code, access sensitive data, or control physical systems), a compromised or hijacked agent could be used for malicious purposes.

- Technical and Practical Limitations:

- Common Sense Reasoning: While LLMs have improved common-sense reasoning, AI agents can still lack the deep, nuanced understanding of the world that humans possess, leading to occasional nonsensical or inappropriate actions in novel situations.

- Generalization to Unseen Scenarios: Agents may perform well in environments similar to their training data but struggle to generalize effectively to entirely new or unexpected scenarios.

- Cost of Development and Deployment: Building, training, and maintaining sophisticated AI agents can be expensive, requiring specialized expertise, significant computational resources, and large datasets.

- Data Dependency and Quality: The performance of AI agents is heavily dependent on the quality, quantity, and relevance of the data used for their training and operation. Poor data can lead to poor performance.

- Integration Complexity: Integrating AI agents with existing legacy systems and complex enterprise IT environments can be a significant technical hurdle.

- Over-Reliance and Deskilling:

- Atrophy of Human Skills: Excessive reliance on autonomous agents for tasks previously performed by humans could lead to a decline in human skills and expertise in those areas over time.

- Complacency and Reduced Oversight: If agents perform reliably most of the time, there is a risk that human oversight may become complacent, potentially leading to missed errors or failures to intervene when necessary.
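A toy example can show the reward-hacking failure mode described above. Suppose, hypothetically, that an agent is rewarded for the fraction of a test suite that passes. Under that reward, deleting the failing tests scores higher than honestly fixing bugs. Dividing by the original suite size instead removes that particular loophole. This is one small instance of the careful reward design the text calls for.

```python
def proxy_reward(results: list[bool]) -> float:
    """Naive reward: fraction of the remaining tests that pass."""
    return sum(results) / len(results) if results else 1.0


def guarded_reward(results: list[bool], original_size: int) -> float:
    """Patched reward: passing tests divided by the original suite size."""
    return sum(results) / original_size


initial = [True] * 6 + [False] * 4       # 10 tests, 4 failing because of real bugs
honest = [True] * 8 + [False] * 2        # intended behaviour: fix 2 of the bugs
hacked = [ok for ok in initial if ok]    # loophole: delete the 4 failing tests

for name, outcome in [("honest", honest), ("hacked", hacked)]:
    print(name, proxy_reward(outcome), guarded_reward(outcome, len(initial)))
```

The patched reward is still only a proxy. An agent could, for instance, weaken assertions instead of deleting tests. That is why reward design has to be paired with testing.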

Addressing these limitations requires a multi-pronged approach involving ongoing research in AI safety and alignment, robust testing and validation methodologies, the development of ethical guidelines and regulatory frameworks, investment in explainability techniques, a focus on secure AI development practices, and a societal commitment to managing the broader impacts of this transformative technology. The journey of agentic AI in 2025 is one of balancing immense promise with profound responsibility.

The Future of Agentic AI: Trends, Trajectories, and the Road to AGI (and Beyond)

As we stand firmly in 2025, the trajectory of agentic AI is not just one of incremental improvement but of explosive growth and exponential advancement, pointing towards a future where autonomous systems are even more deeply integrated into the fabric of our digital and physical worlds. The trends we observe today – increasing autonomy, more sophisticated reasoning, seamless tool integration, and multi-agent collaboration – are merely precursors to more profound transformations. The market itself is a testament to this surge: industry analysts like Mordor Intelligence projected the Agentic AI Market to reach approximately USD 7.28 billion in 2025, with a forecasted compound annual growth rate (CAGR) of around 41.48% to reach USD 41.32 billion by 2030. Other reports, such as those highlighted by Warmly.ai and WisdomTree, suggest similar figures, with some projecting the global AI agents market to hit $7.6 billion in 2025, up from $5.4 billion in 2024, and potentially soaring to over $50 billion by 2030. This rapid market expansion, with some analysts even forecasting a 45% annual growth rate for AI agents in business applications (as noted by HR Executive citing BCG researchers), underscores the immense investment and accelerating adoption across industries. Deloitte further predicts that 25% of enterprises using Generative AI will deploy autonomous AI agents in 2025, a figure expected to double to 50% by 2027.
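The projections quoted above are at least internally consistent. The compound annual growth rate can be recomputed from the cited Mordor Intelligence endpoints, along with the rates implied by the alternative figures:

$$
\mathrm{CAGR} = \left(\frac{V_{2030}}{V_{2025}}\right)^{1/5} - 1 = \left(\frac{41.32}{7.28}\right)^{1/5} - 1 \approx 5.676^{0.2} - 1 \approx 0.415
$$

$$
\frac{7.6}{5.4} - 1 \approx 0.407, \qquad \left(\frac{50}{7.6}\right)^{1/5} - 1 \approx 0.458
$$

The USD 41.32 billion endpoint therefore matches the stated 41.48% CAGR up to rounding. The alternative series implies roughly 41% growth from 2024 to 2025, and about 46% a year to reach USD 50 billion by 2030, close to the 45% growth figure cited.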

Looking ahead, several key developments, fueled by this market momentum, are poised to shape the future of agentic AI, potentially accelerating progress towards Artificial General Intelligence (AGI) and raising new societal considerations.

1. Hyper-Specialization and Hyper-Personalization of Agents:

While general-purpose agent frameworks are becoming more powerful, a significant trend is the development of highly specialized agents tailored for specific industries, tasks, or even individual users. The market is evolving into distinct categories of agent frameworks and specialized agent providers, as noted by firms like Alvarez & Marsal. We will see:

- Domain-Specific Agents: Agents with deep knowledge and optimized capabilities for sectors like healthcare (e.g., diagnostic assistants, personalized medicine agents), finance (e.g., hyper-adaptive trading agents, fraud detection specialists), scientific research (e.g., agents that design experiments, analyze data, and draft research papers), and education (e.g., personalized tutors that adapt to individual learning styles in real-time). The demand for these vertical-specific solutions is a key driver of the market growth.

- Personal AI Avatars: The concept of a personal digital assistant will evolve into a more comprehensive personal AI avatar. This avatar will have a deep, longitudinal understanding of its user – their goals, preferences, habits, communication style, and even emotional states (with explicit consent and robust privacy). It will act as a true proactive partner, managing not just schedules and tasks but also filtering information, curating experiences, and even acting as a digital representative in certain contexts. Imagine an AI that can negotiate minor service disputes on your behalf or summarize your entire week’s communications into actionable insights. The consumer-facing agent market is a significant segment of the overall growth.

2. Advanced Multi-Agent Systems and Swarm Intelligence:

The collaboration of multiple AI agents (as seen with frameworks like CrewAI and MetaGPT) is just the beginning. Future systems will involve:

- Sophisticated Negotiation and Coordination: Agents will develop more complex protocols for negotiation, resource allocation, and conflict resolution within multi-agent teams. This will enable them to tackle even larger and more dynamic problems collaboratively.

- Emergent Swarm Intelligence: Inspired by natural systems like ant colonies or bird flocks, we may see the rise of AI agent swarms where collective intelligence emerges from the simple interactions of many individual agents. This could be applied to tasks like distributed sensing, large-scale optimization problems (e.g., managing city-wide traffic flow), or even self-organizing disaster response systems.

- Economic Models for Agent Interaction: As agents increasingly interact and provide services to each other, we might see the development of micro-economies where agents can trade resources, information, or computational services, potentially using blockchain or similar technologies for transparent and secure transactions. This inter-agent economy could itself become a new market frontier.

3. Deeper Integration with Robotics and the Internet of Things (IoT):

The convergence of agentic AI with robotics and IoT will blur the lines between the digital and physical worlds, leading to:

- Embodied AI Agents: Robots will become more than just programmable machines; they will be true embodied agents capable of perceiving their physical environment with rich multimodal sensors, reasoning about physical tasks, and acting with dexterity and adaptability. MIT’s latest robotics innovation explores how soft-bodied creatures like worms and turtles are inspiring next-gen agentic machines that better adapt to real-world challenges. This will revolutionize manufacturing (highly adaptive factories), logistics (autonomous delivery fleets), healthcare (robotic surgery assistants, elder care robots), and even domestic assistance. The market for AI-powered robotics is closely linked to agentic AI advancements.

- Intelligent Environments: Our homes, cities, and workplaces will become increasingly populated with interconnected IoT devices, all orchestrated by agentic AI. Smart cities will use agents to optimize energy consumption, manage traffic, enhance public safety, and provide personalized citizen services. Smart homes will have central AI agents that manage appliances, security, climate control, and personal routines in a highly intuitive and proactive manner.

- Human-Robot Collaboration: The future will see much tighter collaboration between humans and embodied AI agents. In factories, robots will work alongside humans, adapting to human actions and providing assistance. In surgery, AI-powered robotic systems will augment the surgeon’s skills, enabling more precise and less invasive procedures.

4. Enhanced Reasoning, Explainability, and Trustworthiness:

For agentic AI to be widely adopted and for the market to sustain its growth, especially in critical applications, significant progress is needed in:

- Causal Reasoning and Common Sense: Agents will move beyond pattern recognition to develop a deeper understanding of cause and effect and more robust common-sense reasoning. This will make them less prone to nonsensical errors and better able to handle novel situations.

- Explainable AI (XAI): As agents make more autonomous decisions, the need for transparency and explainability will become paramount. Future agents will be better able to explain their reasoning, justify their actions, and provide insights into their decision-making processes. This is crucial for debugging, building trust, and ensuring accountability.

- Verifiable Safety and Alignment: Research into AI safety and alignment will intensify, leading to new techniques for building agents that are provably safe, robust against adversarial attacks, and reliably aligned with human values. This may involve formal verification methods, new training paradigms, and more sophisticated oversight mechanisms. Public trust is essential for market adoption.

5. The Role of Agentic AI in the Pursuit of Artificial General Intelligence (AGI):

Many researchers believe that agentic AI is a critical stepping stone towards AGI – AI that possesses human-like cognitive abilities across a wide range of tasks. The ability of agents to learn, plan, reason, and interact with complex environments is fundamental to general intelligence.

- AGI as a Society of Agents: Some theories propose that AGI itself might emerge not as a monolithic entity but as a complex society of interacting specialized agents, capable of collective learning and problem-solving.

- Accelerating Scientific Discovery: Agentic AI systems dedicated to scientific research could dramatically accelerate the pace of discovery in fields like medicine, materials science, and fundamental physics, potentially unlocking breakthroughs that lead to AGI or help manage its development.

- Ethical and Societal Implications of Pre-AGI Agents: Even before reaching full AGI, increasingly capable agentic systems, driven by the rapid market expansion, will raise profound ethical and societal questions. Discussions around job displacement, algorithmic bias, the potential for misuse, and the very definition of autonomy and responsibility will become even more critical. Proactive development of governance frameworks, ethical guidelines, and public discourse will be essential to navigate this transition.

6. The Long-Term Vision: Beyond Task Completion to True Partnership and Understanding:

Looking further into the future, the aspiration for agentic AI extends beyond mere task automation. The goal is to develop AI systems that can act as true partners to humans, possessing a deeper understanding of human context, emotions, and intentions. This involves:

- Empathetic AI: Agents that can understand and respond to human emotions (or at least convincingly simulate such understanding), leading to more natural and supportive interactions.

- AI for Wisdom and Collective Intelligence: Systems that can help humanity grapple with complex global challenges by synthesizing vast amounts of information, facilitating collaborative problem-solving, and offering novel perspectives.

While the path to this future is fraught with challenges – technical, ethical, and societal – the advancements and significant market growth of agentic AI in 2025 provide a clear indication of the transformative journey ahead. The development of these autonomous systems is not just a technological endeavor but a societal one, requiring careful consideration, open dialogue, and a commitment to harnessing their power for the collective good. The coming years will be pivotal in shaping how these intelligent agents integrate into our lives and what kind of future they help us build.

FAQs

As agentic AI continues to evolve and permeate various aspects of our lives and work, many questions arise. Here are some frequently asked questions about autonomous AI agents in 2025:

1. What is the main difference between agentic AI and traditional AI or LLMs like ChatGPT?

The primary difference lies in autonomy and action-taking. Traditional AI and standard LLMs are primarily reactive; they respond to prompts and execute specific instructions to provide information or generate content. Agentic AI, on the other hand, is proactive and goal-oriented. It can perceive its environment, make decisions, formulate multi-step plans, and execute actions using various tools (like web browsers, APIs, or code interpreters) to achieve a defined objective with minimal human intervention. Think of an LLM as a brilliant brain that can answer questions, while an agentic AI is that brain embodied with the ability to act on its knowledge and decisions to complete tasks in the world.
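To make the reactive-versus-agentic contrast concrete, here is a minimal sketch of the decide, act, and observe loop in plain Python. It assumes a hypothetical `scripted_policy` function standing in for the LLM that would choose the next action in a real agent, and a hypothetical `lookup_days` tool standing in for a web search or API call; `run_agent` and `Step` are illustrative names, not any framework's API.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class Step:
    tool: Optional[str]  # name of the tool to call, or None to finish
    arg: str             # tool argument, or the final answer when tool is None

Observation = tuple[str, str, str]  # (tool, arg, result)
Policy = Callable[[str, list[Observation]], Step]

def run_agent(goal: str, policy: Policy, tools: dict[str, Callable[[str], str]],
              max_steps: int = 10) -> tuple[str, list[Observation]]:
    """Repeat decide, act, observe until the policy finishes or the budget runs out."""
    observations: list[Observation] = []
    for _ in range(max_steps):
        step = policy(goal, observations)        # decide (an LLM call in a real agent)
        if step.tool is None:
            return step.arg, observations
        result = tools[step.tool](step.arg)      # act through a tool
        observations.append((step.tool, step.arg, result))  # perceive the outcome
    raise RuntimeError("step budget exhausted before the goal was reached")

# Hypothetical tool standing in for a web search or API call.
DAYS_IN_MONTH = {"jan": 31, "feb": 28, "mar": 31}

def lookup_days(month: str) -> str:
    return str(DAYS_IN_MONTH[month])

def scripted_policy(goal: str, observations: list[Observation]) -> Step:
    """Hypothetical stand-in for an LLM: look up each month once, then sum the results."""
    looked_up = {arg for _, arg, _ in observations}
    for month in ("jan", "feb", "mar"):
        if month not in looked_up:
            return Step("lookup_days", month)
    return Step(None, str(sum(int(result) for _, _, result in observations)))

answer, trace = run_agent("How many days are in Q1 of a non-leap year?",
                          scripted_policy, {"lookup_days": lookup_days})
print(answer)  # 90
```

A plain LLM call corresponds to a single invocation of the policy; the agent is the loop around it. The `max_steps` budget is a deliberate design choice: a system that picks its own next action needs an external limit so that a confused policy cannot loop forever.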

2. Are AI agents truly autonomous, or do they still require human oversight?

In 2025, AI agents exhibit varying degrees of autonomy. While some can perform well-defined tasks with minimal oversight, most complex or critical applications still benefit from, and often require, a “human-in-the-loop” approach. This means humans set the goals, define constraints, monitor performance, and intervene when the agent encounters novel situations it cannot handle, or when critical decisions with significant consequences need to be made. The goal is often to achieve a high level of functional autonomy for specific tasks, but complete, unsupervised autonomy across all possible scenarios is still a research challenge and often not desirable from a safety perspective.
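One common way to implement the human-in-the-loop pattern described above is an approval gate: routine actions run directly, while actions flagged as high-consequence wait for a human decision. The sketch below assumes a hypothetical `HIGH_IMPACT` set, hypothetical tools, and a reviewer callback `approve`; in practice that callback might open a ticket or a chat prompt rather than return immediately.

```python
from typing import Callable

# Hypothetical policy: actions whose consequences warrant a human decision.
HIGH_IMPACT = {"send_payment", "delete_records"}

def execute_with_oversight(
    action: str,
    arg: str,
    tools: dict[str, Callable[[str], str]],
    approve: Callable[[str, str], bool],
) -> str:
    """Run routine actions directly; pause high-impact ones for human approval."""
    if action in HIGH_IMPACT and not approve(action, arg):
        return f"BLOCKED: {action}({arg}) rejected by reviewer"
    return tools[action](arg)

# Hypothetical tools and a reviewer who rejects everything they are asked about.
tools = {
    "draft_email": lambda text: f"draft saved: {text}",
    "send_payment": lambda amount: f"paid {amount}",
}
reviewer_log = []

def cautious_reviewer(action: str, arg: str) -> bool:
    reviewer_log.append((action, arg))  # record what the human was asked to review
    return False

print(execute_with_oversight("draft_email", "Q3 update", tools, cautious_reviewer))
# draft saved: Q3 update
print(execute_with_oversight("send_payment", "$500", tools, cautious_reviewer))
# BLOCKED: send_payment($500) rejected by reviewer
```

Only the payment reached the reviewer; the draft ran without interrupting anyone. That split is what lets an agent keep high functional autonomy on routine work while humans retain the final say on consequential decisions.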

3. What are the key capabilities of an AI agent?

Key capabilities of AI agents in 2025 include:

- Natural Language Understanding (NLU) and Generation (NLG): To communicate with humans and process textual information.

- Reasoning and Problem Solving: To analyze situations, make inferences, and devise solutions.

- Planning: To break down complex goals into actionable steps.

- Tool Use: To interact with software, APIs, databases, web browsers, and other digital resources.

- Learning and Adaptation: To improve performance over time based on experience and feedback.

- Memory: To retain information from past interactions and observations to inform current decisions.

- Multimodal Perception (increasingly): To process information from various sources like text, images, and audio.
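Two of these capabilities, planning and memory, can be illustrated without any model at all. The sketch below orders the subtasks of the marketing-campaign example from the introduction using Python's standard `graphlib.TopologicalSorter`, so that each step comes only after its prerequisites, and pairs it with a toy keyword-overlap memory. The task graph and the `KeywordMemory` class are hypothetical; real agents often generate plans with an LLM and retrieve memories with embedding (vector) search rather than word overlap.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter

# Hypothetical task graph: each subtask maps to the set of subtasks it depends on.
CAMPAIGN_PLAN = {
    "devise strategy": set(),
    "draft email copy": {"devise strategy"},
    "schedule social posts": {"devise strategy"},
    "monitor engagement": {"draft email copy", "schedule social posts"},
    "adjust plan": {"monitor engagement"},
}

def order_subtasks(plan: dict[str, set[str]]) -> list[str]:
    """Return subtasks in an order that respects every dependency (raises CycleError on cycles)."""
    return list(TopologicalSorter(plan).static_order())

@dataclass
class KeywordMemory:
    """Toy memory: stores notes and recalls those sharing the most words with a query."""
    notes: list[str] = field(default_factory=list)

    def remember(self, note: str) -> None:
        self.notes.append(note)

    def recall(self, query: str, k: int = 2) -> list[str]:
        query_words = set(query.lower().split())
        scored = []
        for index, note in enumerate(self.notes):
            overlap = len(query_words & set(note.lower().split()))
            if overlap > 0:
                scored.append((-overlap, index, note))  # best overlap first, then oldest
        scored.sort()
        return [note for _, _, note in scored[:k]]

memory = KeywordMemory()
memory.remember("customer prefers email over phone")
memory.remember("campaign budget is capped for this quarter")
print(order_subtasks(CAMPAIGN_PLAN))
print(memory.recall("customer email preference"))  # ['customer prefers email over phone']
```

The two independent steps ("draft email copy" and "schedule social posts") may appear in either order, which is exactly the freedom a planner can exploit to run them in parallel.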

4. How are AI agents being used in everyday life in 2025?

Beyond the enterprise applications, AI agents are enhancing everyday life through more sophisticated personal assistants that can manage complex schedules and tasks, advanced customer service interactions that resolve issues more effectively, personalized learning platforms that adapt to individual student needs, and tools that help automate personal research or content creation.

5. What are the biggest challenges or risks associated with agentic AI?

Significant challenges and risks include:

- Safety and Control: Ensuring agents operate safely and reliably, especially in unpredictable environments, and preventing unintended harmful actions (the “alignment problem”).

- Bias and Fairness: AI agents can inherit and amplify biases present in their training data, leading to unfair or discriminatory outcomes.

- Security: Agents can be targets for adversarial attacks or, if compromised, used for malicious purposes.

- Explainability: The “black box” nature of some AI models makes it hard to understand an agent’s decision-making process, which is crucial for trust and accountability.

- Job Displacement: Automation driven by agentic AI may impact employment in certain sectors.

- Ethical Concerns: Questions around privacy, responsibility for agent actions, and the potential for misuse.
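For the safety, control, and security risks, one widely used mitigation is to validate every proposed action before it executes rather than trusting the model's output. The sketch below assumes a hypothetical tool allowlist and a sandbox directory; it rejects tools outside the allowlist and file paths that escape the sandbox (for example via `..`, a classic path-traversal trick a manipulated agent might attempt). It relies on `Path.is_relative_to`, available from Python 3.9, and is one layer of defense, not a complete answer to alignment or adversarial attacks.

```python
from pathlib import Path

# Hypothetical allowlist: the only tools this agent may ever invoke.
ALLOWED_TOOLS = {"read_file", "search_web"}

class ActionRejected(Exception):
    """Raised when a proposed agent action fails a safety check."""

def check_action(tool: str, arg: str, sandbox: Path) -> None:
    """Validate a proposed tool call; raise ActionRejected instead of executing unsafe ones."""
    if tool not in ALLOWED_TOOLS:
        raise ActionRejected(f"tool not allowlisted: {tool}")
    if tool == "read_file":
        root = sandbox.resolve()
        # Resolving normalizes '..' segments; an absolute arg replaces root entirely.
        target = (root / arg).resolve()
        if not target.is_relative_to(root):
            raise ActionRejected(f"path escapes sandbox: {arg}")

proposals = [
    ("read_file", "notes.txt"),
    ("delete_file", "notes.txt"),
    ("read_file", "../secrets.txt"),
    ("read_file", "/etc/passwd"),
]
for tool, arg in proposals:
    try:
        check_action(tool, arg, Path("agent_sandbox"))
        print("allowed:", tool, arg)
    except ActionRejected as err:
        print("rejected:", err)
```

Checking the resolved path, rather than searching the raw string for `..`, means the guard judges where the action would actually land.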

6. How can I start building or experimenting with AI agents?

Several open-source frameworks and platforms are available for developers and enthusiasts to build and experiment with AI agents. Popular choices in 2025 include:

- LangChain: A versatile framework for building LLM-powered applications, including agents with tool-using capabilities.

- Auto-GPT & BabyAGI (and their derivatives): Experimental frameworks showcasing autonomous task completion.

- CrewAI & MetaGPT: Frameworks focused on multi-agent collaboration.

- SuperAGI: A platform often providing a more managed environment for building and running agents.

Many of these tools offer documentation, tutorials, and active communities to help newcomers get started. Familiarity with Python and LLM concepts is generally beneficial.
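The multi-agent collaboration idea behind frameworks such as CrewAI and MetaGPT can be sketched without either library. The example below is framework-free and does not reproduce any framework's API: it assumes hypothetical `Agent` objects whose `act` callables stand in for role-specific LLM calls, and hands each agent's output to the next in a fixed sequence. Multi-agent systems can also use other coordination patterns, such as a manager agent that delegates work.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Agent:
    role: str
    act: Callable[[str], str]  # stand-in for an LLM call with a role-specific prompt

def run_sequential_crew(task: str, agents: list[Agent]) -> list[tuple[str, str]]:
    """Hand each agent's output to the next agent and record every handoff."""
    transcript: list[tuple[str, str]] = []
    work = task
    for agent in agents:
        work = agent.act(work)
        transcript.append((agent.role, work))
    return transcript

# Hypothetical specialists; each lambda would be a model call in a real system.
crew = [
    Agent("researcher", lambda topic: f"notes on {topic}"),
    Agent("writer", lambda notes: f"draft based on {notes}"),
    Agent("reviewer", lambda draft: f"{draft} [approved]"),
]

for role, output in run_sequential_crew("agentic AI trends", crew):
    print(f"{role}: {output}")
```

Keeping a transcript of every handoff is cheap and pays off later: it is the raw material for debugging and explaining what a team of agents actually did.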

7. Will agentic AI lead to Artificial General Intelligence (AGI)?

Agentic AI is considered by many researchers to be a significant step towards AGI because it focuses on creating systems that can autonomously learn, reason, plan, and act in complex environments – all hallmarks of general intelligence. However, AGI implies human-level (or beyond) cognitive abilities across a wide range of tasks, something current agentic AI has not yet achieved. While agentic systems are becoming increasingly capable in specific domains, achieving true AGI remains a long-term research goal with many unsolved challenges.

8. What skills will be important in a future with more agentic AI?

As agentic AI becomes more prevalent, the following skills will become increasingly valuable:

- Prompt Engineering and AI Interaction: Effectively communicating goals and constraints to AI agents.

- Critical Thinking and Evaluation: Assessing the outputs and actions of AI agents.

- AI Ethics and Governance: Understanding and addressing the societal implications of AI.

- Data Literacy: Understanding how data is used to train and operate AI systems.

- Creativity and Complex Problem-Solving: Focusing on tasks that require uniquely human ingenuity and strategic thinking, often in collaboration with AI.

- Adaptability and Continuous Learning: Keeping up with the rapid advancements in AI technology.

These FAQs provide a starting point for understanding the dynamic and rapidly evolving field of agentic AI in 2025. As the technology matures, new questions and insights will undoubtedly emerge.

Conclusion: Embracing the Agentic Future of 2025

The journey into the world of agentic AI, as we have explored, is not merely a glimpse into a distant technological horizon but a deep dive into a reality that is actively shaping 2025. From the foundational concepts that sparked the AI revolution to the sophisticated autonomous agents orchestrating complex tasks today, the evolution has been nothing short of remarkable. We have seen how these intelligent entities, powered by advanced Large Language Models, intricate reasoning engines, and a growing arsenal of tools, are moving beyond simple automation to become proactive, decision-making partners across a multitude of domains.

Agentic AI in 2025 is characterized by its ability to perceive diverse environments, reason through complex problems, plan multi-step actions, and learn from its interactions. This operational loop is the engine driving its transformative impact on business process automation, where it brings new levels of efficiency and adaptability to supply chains and financial operations. It is redefining personal digital assistance, morphing reactive tools into proactive companions that anticipate our needs. In customer support, agentic AI is elevating interactions from frustratingly basic to empathetically effective. For software developers, these agents are becoming indispensable collaborators, accelerating innovation and streamlining the creation process. And across industries, they serve as intelligent orchestrators, weaving together disparate systems into coherent, responsive workflows.

The landscape of tools available to build these agents – from the versatile LangChain and the ambitious Auto-GPT to the collaborative CrewAI and MetaGPT – empowers developers and researchers to push the boundaries of what is possible. These frameworks are democratizing access to agentic capabilities, fostering a vibrant ecosystem of innovation.

However, as we embrace the profound benefits – the enhanced productivity, the data-driven insights, the hyper-personalization, and the sheer scalability – we must also navigate the significant limitations and challenges with wisdom and foresight. The concerns around safety, control, alignment, bias, security, and the broader socioeconomic impact are not trivial. They demand ongoing research, robust ethical frameworks, transparent governance, and a collective commitment to responsible development. The power of agentic AI comes with an inherent responsibility to ensure it serves humanity’s best interests.

The future trajectory points towards even more specialized, personalized, and collaborative agents, deeply integrated with robotics and the IoT, and playing an increasingly significant role in the quest for Artificial General Intelligence. The road ahead is one of immense potential, promising solutions to some of our most complex problems and new paradigms for human-AI partnership.

Ultimately, understanding agentic AI in 2025 is about recognizing a pivotal shift in our relationship with technology. It’s about moving from instructing machines to collaborating with intelligent entities. As these autonomous AI agents become more capable and ubiquitous, our ability to design them thoughtfully, deploy them responsibly, and adapt to their presence will define the next chapter of technological and societal evolution. The agentic future is not just coming; it is here, and it invites us all to participate in shaping its course.